. Filter duplicate rows from flat files in BIRT

You have a CSV file that involves too many duplicates. You have to remove the duplicates based on specific columns. For eg:

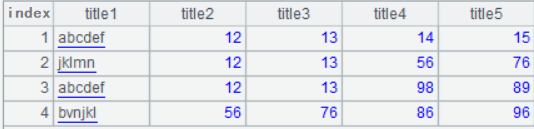

csvfile1:

title1 title2 title3 title4 title5

abcdef 12 13 14 15

jklmn 12 13 56 76

abcdef 12 13 98 89

bvnjkl 56 76 86 96

Now, based on title1, title2 and title3 you have to remove duplicates and add the unique entries in a new data set for BIRT. As you can see abcdef row is not unique and repeats based on title1,title2, and title3 so it should be removed and the output should look like:

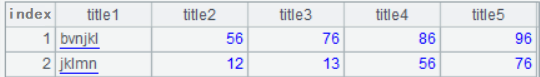

Expected Output:

title1 title2 title3 title4 title5

jklmn 12 13 56 76

bvnjkl 56 76 86 96

In BIRT, Suppress Duplicates just makes sure the value in the column is not repeated. This function doesn’t work when there are those many rows with the same values.

If you were hitting a database, you can use something like ‘select distinct/unique’ in your SQL to return only a single row. But it seems in BIRT you don’t have that luxury for flat files.

Solution

Using esProc as Data Source:

Here is the SPL Script:

A |

|

1 |

=file("d:\\source.csv").import@t() |

2 |

=A1.group(title1,title2,title3).select(~.len()==1).conj() |

3 |

return A2 |

A1: Read in the content of source.csv

A2: Group records by the first 3 fields, find single-member groups and concatenate records in the selected groups.

A3: Return the data set to BIRT.

The report can be designed in the same way as you would if you were retrieving the data from a database. For detail SPL integration with BIRT, see How to Call an SPL Script in BIRT.

For many difficult calculations of Text Computing, you can refer to Structured Text Computing.

If you have any questions or comments, please leave them below.

SPL Official Website 👉 http://www.scudata.com

SPL Feedback and Help 👉 https://www.reddit.com/r/esProc

SPL Learning Material 👉 http://c.scudata.com

SPL Source Code and Package 👉 https://github.com/SPLWare/esProc

Discord 👉 https://discord.gg/ydhVnFH9

Youtube 👉 https://www.youtube.com/@esProc_SPL