Data Grouping in Python

Grouping records by column(s) is a common need of data analyses. Such scenarios include counting employees in each department of a company, calculating average salary of male and female employees respectively in each department, and calculating average salary of employees in different ages. Pandas has groupby function to be able to handle most of the grouping tasks conveniently. But there are certain tasks that the function finds it hard to manage. Here let’s examine these “difficult” tasks and try to give alternative solutions.

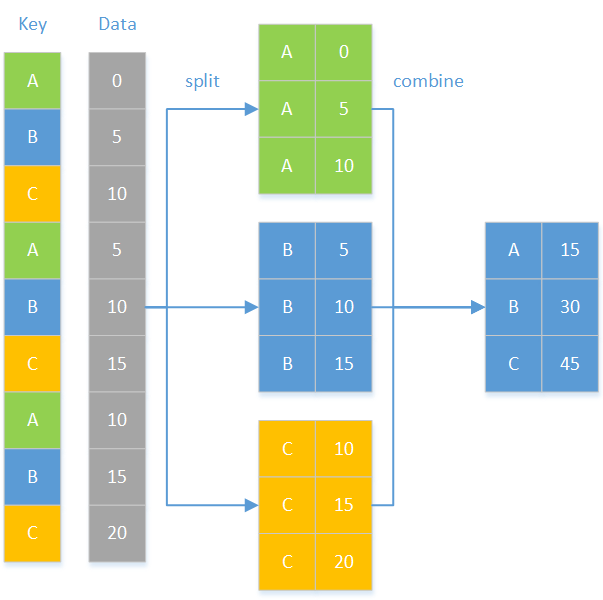

groupby is one of the most important Pandas functions. It is used to group and summarize records according to the split-apply-combine strategy. The following diagram shows the workflow:

I Grouping & aggregation by a single field

You group records by a certain field and then perform aggregate over each group.

This is the simplest use of the above strategy. To count employees in each department based on employee information, for instance:

Problem analysis: Use department as the key, group records by it and count the records in each group.

Below is part of the employee information:

EID |

NAME |

SURNAME |

GENDER |

STATE |

BIRTHDAY |

HIREDATE |

DEPT |

SALARY |

1 |

Rebecca |

Moore |

F |

California |

1974/11/20 |

2005/3/11 |

R&D |

7000 |

2 |

Ashley |

Wilson |

F |

New York |

1980/7/19 |

2008/3/16 |

Finance |

11000 |

3 |

Rachel |

Johnson |

F |

New Mexico |

1970/12/17 |

2010/12/1 |

Sales |

9000 |

4 |

Emily |

Smith |

F |

Texas |

1985/3/7 |

2006/8/15 |

HR |

7000 |

5 |

Ashley |

Smith |

F |

Texas |

1975/5/13 |

2004/7/30 |

R&D |

16000 |

… |

… |

… |

… |

… |

… |

… |

… |

… |

Python script:

import pandas as pd employee = pd.read_csv("Employees.csv") dept_emp_num = employee.groupby('DEPT')['DEPT'].count() print(dept_emp_num) |

Import data Grouping and perform count over each group |

Explanation: groupby(‘DEPT’) groups records by department, and count() calculates the number of employees in each group.

II Grouping & aggregation by multiple fields

You group records by multiple fields and then perform aggregate over each group.

We handle it in a similar way. To calculate the average salary for both male and female employees in each department based on the same employee information in the previous instance.

Problem analysis: There are two grouping keys, department and gender. We treat thea composite key as a whole to perform grouping and aggregate.

Python script:

import pandas as pd employee = pd.read_csv("Employees.csv") dept_gender_salary = employee.groupby(['DEPT','GENDER'],as_index=False).SALARY.mean() print(dept_gender_salary) |

Group by two keys and then summarize each group |

Explanation: The expression groupby(['DEPT','GENDER']) takes the two grouping fields as parameters in the form of a list. The expression as_index specifies whether to use the grouping fields as the index using True or False (Here False means not using them as the index). The mean() function calculates the average salary.

III Grouping & aggregation by a computed column

The grouping key is not explicit data and needs to be calculated according to the existing data. Such a key is called computed column. To calculate the average salary for employees of different years, for instance:

Problem analysis: There isn’t a years column in the employee information. We need to calculate it according to the employees’ birthdays, group records by the calculated column, and calculate the average salary.

Python script:

import pandas as pd import numpy as np employee = pd.read_csv("Employees.csv") employee['BIRTHDAY']=pd.to_datetime(employee['BIRTHDAY']) years_salary = employee.groupby(np.floor((employee['BIRTHDAY'].dt.year-1900)/10)).SALARY.mean() print(years_salary) |

Convert the BIRTHDAY column into date format

Calculate an array of calculated column values, group records by them, and calculate the average salary |

Explanation: Since the years values don’t exist in the original data, Python uses np.floor((employee['BIRTHDAY'].dt.year-1900)/10) to calculate the years column, groups the records by the new column and calculate the average salary.

IV Multiple aggregates

You perform one type of aggregate operation over each of multiple columns or several types of aggregates over one or more columns.

1. One aggregate on each of multiple columns

You perform one type of aggregate on each of multiple columns. To count the employees and calculate the average salary in every department, for example:

Problem analysis: The count aggregate is on EID column, and the average aggregate is over the salary column. Each column has its own one aggregate.

Python script:

import pandas as pd employee = pd.read_csv("Employees.csv") dept_agg = employee.groupby('DEPT',as_index=False).agg({'EID':'count','SALARY':'mean'}) print(dept_agg.rename(columns={'EID':'NUM','SALARY':'AVG_SALARY'})) |

Group records by DEPT, perform count on EID and average on SALARY Rename the columns |

Explanation: Pandas agg() function can be used to handle this type of computing tasks. Relevant columns and the involved aggregate operations are passed into the function in the form of dictionary, where the columns are keys and the aggregates are values, to get the aggregation done.

2. Multiple aggregates on one column

You perform more than one type of aggregate on a single column. For the previous task, we can also sum the salary and then calculate the average. This way we perform two aggregates, count and average, on the salary column.

Python script:

import pandas as pd employee = pd.read_csv("Employees.csv") dept_agg = employee.groupby('DEPT').SALARY.agg(['count','mean']).reset_index() print(dept_agg.rename(columns={'count':'NUM','mean':'AVG_SALARY'})) |

Perform count and then average on SALARY column Rename columns |

Explanation: We can combine the aggregate operations as a list and take it as the parameter to pass to the agg() function.

3. Multiple aggregates over multiple columns

You summarize multiple columns during which there are multiple aggregates on a single column. The aggregate operation can be user-defined.

To get the number of employees, the average salary and the largest age in each department, for instance:

Problem analysis: Counting the number of employees and calculating the average salary are operations on the SALARY column (multiple aggregates on one column). Finding the largest age needs a user-defined operation on BIRTHDAY column.

Python script:

import pandas as pd import datetime def max_age(s): today = datetime. datetime.today().year age = today-s.dt.year return age.max() employee = pd.read_csv("Employees.csv") employee['BIRTHDAY']=pd.to_datetime(employee['BIRTHDAY']) dept_agg = employee.groupby('DEPT').agg({'SALARY':['count','mean'],'BIRTHDAY':max_age})

dept_agg.columns = ['NUM','AVG_SALARY','MAX_AGE'] print(dept_agg.reset_index()) |

The user-defined function for getting the largest age Year Get ages

Group records by DEPT, perform count and average on SALARY, and use the user-defined max_age function to get the largest age Rename columns

|

Explanation: Columns to be summarized and the aggregate operations are passed through parameters to the function in the form of dictionary. For a column requiring multiple aggregate operations, we need to combine the operations as a list to be used as the dictionary value.

V Copying the grouping & aggregate results

You extend each of the aggregate results to the length of the corresponding group. This is equivalent to copying an aggregate result to all rows in its group. To add a new column containing average salary of each department to the employee information, for instance:

Problem analysis: Group records by department, calculate the average salary in each department, and populate each average value to the corresponding group while maintaining the original order.

Python script:

import pandas as pd employee = pd.read_csv("Employees.csv") employee['AVG_SALARY'] =employee.groupby('DEPT').SALARY.transform('mean') print(employee) |

Group records by DEPT and calculate average on SLARY |

Explanation: Group records by department and calculate average salary in each group. transform() function calculates aggregate on each group, returns the result and populates it to all rows in the order of the original index. That makes sure that the records maintain the original order.

VI Handling grouping subsets

You perform one or more non-aggregate operations on each group. To sort each group, for example, we are concerned with the order of the records instead of an aggregate. To sort records in each department by hire date in ascending order, for example:

Problem analysis: Group records by department, and loop through each group to order records by hire date.

Python script:

import pandas as pd employee = pd.read_csv("Employees.csv") employee['HIREDATE']=pd.to_datetime(employee['HIREDATE']) employee_new =employee.groupby('DEPT',as_index=False).apply(lambda x:x.sort_values('HIREDATE')).reset_index(drop=True) print(employee_new) |

Modify hire date format

Group records by DEPT, sort each group by HIREDATE, and reset the index

|

Explanation: To sort records in each group, we can use the combination of apply()function and lambda. The lambda expression loops through groups to sort records in each group using sort_values() function, and returns the sorting result.

There are more complicated computing goals. To find the difference between salary of the eldest employee and that of the youngest employee in each department, for instance:

Problem analysis: Group records by department, locate the eldest employee record and the youngest employee record, and calculate their salary difference.

Python script:

import pandas as pd def salary_diff(g): max_age = g['BIRTHDAY'].idxmin() min_age = g['BIRTHDAY'].idxmax() diff = g.loc[max_age]['SALARY']-g.loc[min_age]['SALARY'] return diff employee = pd.read_csv("Employees.csv") employee['BIRTHDAY']=pd.to_datetime(employee['BIRTHDAY']) salary_diff = employee.groupby('DEPT').apply(salary_diff) print(salary_diff) |

salary_diff(g) function calculates the salary difference over each group The index of the eldest employee record The index of the youngest employee record Calculate the salary difference

Group by DEPT and use a user-defined function to get the salary difference

|

Explanation: The script uses apply()and a user-defined function to get the target. apply() passes the grouping result to the user-defined function as a parameter. Parameter g in the user-defined function salary_diff() is essentially a data frame of Pandas DataFrame format, which is the grouping result here. The script gets the index of the eldest employee record and that of the youngest employee record over the parameter and then calculate the difference on salary field.

Summary:

Mastering Pandas groupby methods are particularly helpful in dealing with data analysis tasks.

Let’s take a further look at the use of Pandas groupby though real-world problems pulled from Stack Overflow.

VII Position-based grouping

You group records by their positions, that is, using positions as the key, instead of by a certain field. Such a scenario includes putting every three rows to same group, and placing rows at odd positions to a group and those at even positions to the other group. Below is an example:

source: https://stackoverflow.com/questions/59110612/pandas-groupby-mode-every-n-rows

Below is part of the source data:

time a b

0 0.5 -2.0

1 0.5 -2.0

2 0.1 -1.0

3 0.1 -1.0

4 0.1 -1.0

5 0.5 -1.0

6 0.5 -1.0

7 0.5 -3.0

8 0.5 -1.0

We want to group and combine data every three rows, and keep the mode in each column in each group. The expected result is as follows:

time a b

2 0.5 -2.0

5 0.1 -1.0

8 0.5 -1.0

Problem analysis: This grouping task has nothing to do with column values but involve positions. We perform integer multiplications by position to get a calculated column and use it as the grouping condition.

Python script:

import pandas as pd import numpy as np data = pd.read_csv("group3.txt",sep='\t') res = data.groupby(np.arange(len(data)) // 3).agg(lambda x: x.mode().iloc[-1]) print(res) |

Group records by the calculated column, calculate modes through the cooperation of agg function and lambda, and get the last mode of each column to be used as the final value in each group |

Explanation: The expression np.arange(len(data)) // 3 generates a calculated column, whose values are [0 0 0 1 1 1 2 2 2]. The script uses it as the key to group data every three rows. The expression agg(lambda x: x.mode()) gets the mode from each column in every group. In the first group the modes in time column is [0,1,2], and the modes in a and b columns are [0.5] and [-2.0] respectively. The script then uses iloc[-1] to get their last modes to use as the final column values.

VIII Grouping by changed value

You group ordered data according to whether a value in a certain field is changed. That is, a new group will be created each time a new value appears. Here’s an example:

Source: https://stackoverflow.com/questions/41620920/groupby-conditional-sum-of-adjacent-rows-pandas

Below is part of the original data:

duration location user

0 10 house A

1 5 house A

2 5 gym A

3 4 gym B

4 10 shop B

5 4 gym B

6 6 gym B

After data is grouped by user, sum duration values whose location values are continuously the same, and perform the next sum on duration when location value changes. Below is the expected result:

duration location user

15 house A

5 gym A

4 gym B

10 shop B

10 gym B

Problem analysis: Order is import for location column. Records with continuously same location values are put into same group, and a record is put into another group once the value is changed. When user is B, location values in row 4 (whose index is 3) are [gym,shop,gym,gym]. Here we shouldn’t just put three same gyms into one group but should put the first gym in a separate group, because the location value after the first gym is shop, which is a different value. Shop should be put another separate group. And then the other two gyms should be in same group because they are continuously same. So the grouping result for user B should be [[gym],[shop],[gym,gym]]. That’s why we can’t use df.groupby(['user','location']).duration.sum() to get the result. Instead we need a calculated column to be used as the grouping condition.

They Python script:

import pandas as pd df = pd.DataFrame({'user' : ['A', 'A', 'A', 'B', 'B', 'B','B'], 'location' : ['house','house','gym','gym','shop','gym','gym'], 'duration':[10,5,5,4,10,4,6]}) derive = (df.location != df.location.shift()).cumsum() res = df.groupby(['user', 'location', derive], as_index=False, sort=False)['duration'].sum() print(res) |

Generate data for computation

Create a calculated column Group records by user, location and the calculated column, and then sum duration values

|

Explanation: The calculated column derive gets its values by accumulating location values before each time they are changed. The cumulated values are [1 1 2 2 3 4 4]. Then group the original data by user, location and the calculated array, and perform sum on duration.

IX Grouping by a condition

You create a new group whenever the value of a certain field meets the specified condition when grouping ordered data. Below is an example:

Source: https://stackoverflow.com/questions/62461647/choose-random-rows-in-pandas-datafram

Below is part of the original data:

ID code

333_c_132 x

333_c_132 n06

333_c_132 n36

333_c_132 n60

333_c_132 n72

333_c_132 n84

333_c_132 n96

333_c_132 n108

333_c_132 n120

999_c_133 x

999_c_133 n06

999_c_133 n12

999_c_133 n24

998_c_134 x

998_c_134 n06

998_c_134 n12

998_c_134 n18

998_c_134 n36

997_c_135 x

997_c_135 n06

997_c_135 n12

997_c_135 n24

997_c_135 n36

996_c_136 x

996_c_136 n06

996_c_136 n12

996_c_136 n18

996_c_136 n24

996_c_136 n36

995_c_137 x

We want to get a random row between every two x values in code column.

The expected result is as follows:

333_c_132 n06

999_c_133 n12

998_c_134 n18

997_c_135 n36

996_c_136 n18

Problem analysis: To get a row from two x values randomly, we can group the rows according to whether the code value is x or not (that is, create a new group whenever the code value is changed into x), and get a random row from the current group. So we still need a calculated column to be used as the grouping key.

The Python script:

import pandas as pd df = pd.read_csv("data.txt") derive = df.code.eq('x').cumsum() res=df[df.code.ne('x')].groupby(derive).apply(lambda x : x.sample(1)) res=res.reset_index(level=0, drop=True) print(res) |

Generate a calculated column Group records by the calculated column and get a random record from each group through the cooperation of apply function and lambda Reset the index

|

Explanation: code.eq(x) returns True when code is x and False when code isn’t x. cumsum() accumulates the number of true values and false values to generate a calculated column [1 1 1 1 1 1 1 1 1 2 2…]. Then the script finds the records where code is x, group records by those x values, and get a random record from each group.

Summary:

In all the above examples, the original data set is divided into a number of subsets according to a specified condition, and has the following two features:

1)No subset is empty;

2)Each member in the original data set belongs to and only belongs to one subset.

We call this type of grouping the full division. There is also partial division.

Here are examples.

X Alignment grouping

Alignment grouping has a base set. It compares an attribute (a field or an expression) of members of the to-be-grouped set with members of the base set and puts members matching a member of the base set into same subset. The number of subsets is the same as the number of members in the base set. The alignment grouping has three features:

1)There may be empty subsets (one or more members of the base set don’t exist in the to-be-grouped set, for instance);

2)There may be members of the to-be-grouped set that are not put into any group (they are not so important as to be included in the base set, for instance);

3)Each member in the to-be-grouped set belongs to one subset at most.

1. Empty subsets

A company wants to know the precise number of employees in each department. If a department doesn’t have male employees or female employees, it records their number as 0.

Problem analysis: If we group data directly by department and gender, which is groupby([‘DEPT’,’GENDER’]), employees in a department that doesn’t have female employees or male employees will all be put into one group and the information of absent gender will be missing. It’s easy to think of an alternative. That solution groups records by department, generates a [male, female] base set to left join with each group, groups each joining result by gender and then count the numbers of male and female employees. This will make sure that each subgroup includes both female employees and male employees.

Python script:

import pandas as pd def align_group(g,l,by): d = pd.DataFrame(l,columns=[by]) m = pd.merge(d,g,on=by,how='left') return m.groupby(by,sort=False) employee = pd.read_csv("Employees.csv") l = ['M','F'] res = employee.groupby('DEPT').apply(lambda x:align_group(x, l, 'GENDER').apply(lambda s:s.EID.count())) print(res) |

Alignment grouping function Generate the base dataframe set and use merge function to perform the alignment grouping

Define a sequence Group records by DEPT, perform alignment grouping on each group, and perform count on EID in each subgroup

|

Explanation:

The user-defined function align_group uses merge() function to generate the base set and perform left join over it and the to-be-grouped set, and then group each joining result set by the merged column. After records are grouped by department, the cooperation of apply() function and the lambda expression performs alignment grouping on each group through a user-defined function, and then count on EID column. (Note: You shouldn’t perform count on GENDER because all GENDER members are retained during the merge operation. When there is an empty subset, the result of count on GENDER will be 1 and the rest of columns will be recorded as null when being left-joined. That will result in a zero result for a count on EID).

2. Members of the to-be-grouped set that are not put into any group

The task is to group records by the specified departments ['Administration', 'HR', 'Marketing', 'Sales'], count their employees and return result in the specified department order.

Problem analysis: We can filter away the records not included by the specified set of departments using left join.

The Python script:

import pandas as pd def align_group(g,l,by): d = pd.DataFrame(l,columns=[by]) m = pd.merge(d,g,on=by,how='left') return m.groupby(by,sort=False) employee = pd.read_csv("Employees.csv") sub_dept = ['Administration', 'HR', 'Marketing', 'Sales'] res = align_group(employee,sub_dept,'DEPT').apply(lambda x:x.EID.count()) print(res) |

Alignment grouping function

The specified subset of departments Use the alignment function to group records and perform count on EID |

Explanation: Pandas doesn’t directly support the alignment grouping functionality, so it’s roundabout to implement it. Besides, the use of merge function results in low performance.

XI Enumeration grouping

An enumeration grouping specifies a set of conditions, computes the conditions by passing each member of the to-be-grouped set as the parameter to them, and puts the record(s) that make a condition true into same subset. The subsets in the result set and the specified condition has a one-to-one relationship. One feature of the enumeration grouping is that a member in the to-be-grouped set can be put into more than one subset.

Here’s an example

The task is to group employees by durations of employment, which are [employment duration <5 years, 5 years <= employment duration <10 years, employment duration >=10 years, employment duration >=15 years], and count female and male employees in each group (List all eligible employee records for each enumerated condition even if they also meet other conditions).

Problem analysis: The enumerated conditions employment duration >=10 years and employment duration >=15 years have overlapping periods. Employees who have stayed in the company for at least 15 years also meet the other condition. A calculated column doesn’t support putting one record in multiple groups. We need to loop through all conditions, search for eligible records for each of them, and then perform the count.

import pandas as pd import datetime def eval_g(dd:dict,ss:str): return eval(ss,dd) emp_file = 'E:\\txt\\employee.txt' emp_info = pd.read_csv(emp_file,sep='\t') employed_list = ['Within five years','Five to ten years','More than ten years','Over fifteen years'] employed_str_list = ["(s<5)","(s>=5) & (s<10)","(s>=10)","(s>=15)"] today = datetime.datetime.today().year arr = pd.to_datetime(emp_info['HIREDATE']) employed = today-arr.dt.year emp_info['EMPLOYED']=employed dd = {'s':emp_info['EMPLOYED']} group_cond = [] for n in range(len(employed_str_list)): emp_g = emp_info.groupby(eval_g(dd,employed_str_list[n])) emp_g_index = [index for index in emp_g.size().index] if True not in emp_g_index: female_emp=0 male_emp=0 else: group = emp_g.get_group(True) sum_emp = len(group) female_emp = len(group[group['GENDER']=='F']) male_emp = sum_emp-female_emp group_cond.append([employed_list[n],male_emp,female_emp]) group_df = pd.DataFrame(group_cond,columns=['EMPLOYED','MALE','FEMALE']) print(group_df) |

The function for converting strings into expressions

Grouping conditions

Calculate employment durations

Loop through grouping conditions Group records by conditions Grouping indexes If there are not eligible records Then the number of female or male employees are 0

If there are records meeting the current condition Then create a group for them And count the female and male employees

Summarize the count results for all conditions |

Explanation: EMPLOYED is a column of employment durations newly calculated from HIREDATE column. The user-defined function eval_g() converts enumerated conditions into expressions. The enumerated condition s<5, for instance, is equivalent to the eval_g(dd,ss) expression emp_info['EMPLOYED']<5. The new calculated column value will then be used to group the records. The script loops through the conditions to divide records into two groups according to the calculated column. get_group(True) gets eligible groups. Finally the script uses concat() function to concatenate all eligible groups.

Summary

Python can handle most of the grouping tasks elegantly. It needs to generate a calculated column that meets the grouping condition when dealing with order-based grouping tasks, such as grouping by changed value/condition. It is a little complicated. It becomes awkward when confronting the alignment grouping an enumeration grouping tasks because it needs to take an extremely roundabout way, such the use of merge operation and multiple grouping. That’s time and effort consuming. Pandas still has its weaknesses in handling grouping tasks.

esProc SPL handles the grouping tasks tactfully. esProc is specialized data computing engine. SPL, the language it is based, provides a wealth of grouping functions to handle grouping computations conveniently with a more consistent code style.

Two esProc grouping functions groups()and group() are used to achieve aggregation by groups and subset handling. They are able to handle the above six simple grouping problems in a concise way:

Problem |

SPL code |

Description |

I |

A.groups(DEPT;count(~):NUM) |

Group data table A by DEPT and perform count() |

II |

A.groups(DEPT,GENDER;avg(SALARY):AVG_SALARY) |

Group by DEPT and GENDER and perform avg() |

III |

A.groups((year(BIRTHDAY)- 1900)\10:years;avg(SALARY):AVG_SALARY) |

Name (year(BIRTHDAY)-1900)\10 years and group records |

IV |

A.groups(DEPT;count(EID):NUM,avg(SALARY):AVG_SAL ARY) |

One aggregate on each of multiple columns |

A.groups(DEPT;count(SALARY):NUM,avg(SALARY):AVG_ SALARY) |

Multiple aggregates on one column |

|

A.groups(DEPT;count(SALARY):NUM,avg(SALARY):AVG_ SALARY,max(age(BIRTHDAY)):MAX_AVG) |

Multiple aggregates over multiple columns |

Python is also convenient in handling them but has a different coding style by involving many other functions, including agg, transform, apply, lambda expression and user-defined functions. SPL takes consistent coding styles in the form of groups(x;y) and group(x).(y).

Python scripts are a little complicated in handling the following three problems by involving calculated columns. The ordered set based SPL is able to maintain an elegant coding style by offering options for handling order-based grouping tasks

Problem |

SPL code |

Description |

VII |

A.groups@n((#-1)\3;y) |

Group data table every three records; parameter y is an aggregate expression |

VIII |

A.groups@o(user,location;y) |

@o option: handle grouping problems by changed value |

IX |

A.group@i(code==”x”).(y) |

@i option: handle grouping problems by changed condition |

You can choose to use groups or group function to handle a grouping and aggregate task according to whether you need a post-grouping aggregation or you want to further manipulate data in each subset.

Python is really awkward in managing the last two types groups tasks, the alignment grouping and the enumeration grouping, through the use of merge function and multiple grouping operation. SPL has specialized alignment grouping function, align(), and enumeration grouping function, enum(), to maintain its elegant coding style.

Problem |

SPL grouping |

Description |

X |

s=[“M”,”F”] A.group(DEPT).(~.align@a(s,GENDER).(y)) |

There may be empty subsets |

s=[“Administration”, “HR”, “Marketing”, “Sales”] A.align@a(s,DEPT).(y) |

There may be members of the to-be-grouped set that are not put into any group |

|

XI |

c=[“?<5”,”?>=5 && ?<10”,”?>=10”,”?>=15”] A.enum(c, EMPLOYED) |

There may be members that are put into more than one subset |

Python’s fatal weakness is the handling of big data grouping (data can’t fit into the memory). The language requires external storage read/write and hash grouping. It’s almost impossible for a non-professional programmer to get it done in Python. Read How Python Handles Big Files to learn more.

That article points out Python problems in computing big data (including big data grouping), and introduces esProc SPL’s cursor mechanism. This mechanism supplies group function and groupx() function to handle big data calculations in an elegant way.

SPL Official Website 👉 http://www.scudata.com

SPL Feedback and Help 👉 https://www.reddit.com/r/esProc

SPL Learning Material 👉 http://c.scudata.com

SPL Source Code and Package 👉 https://github.com/SPLWare/esProc

Discord 👉 https://discord.gg/ydhVnFH9

Youtube 👉 https://www.youtube.com/@esProc_SPL

Chinese version