Methods for implementing data processing in microservices

A complete microservice system involves many parts:

· service governance (registration, discovery and deregistration)

· a service gateway

· fault tolerance and inter-service communication

· monitoring and security

· deployment

Building all of this from scratch in a general-purpose language is rarely realistic. Microservices are therefore usually built on an existing framework or platform such as Spring Cloud, Dubbo or Istio.

Data processing is the core of a microservice. It is also where most of the development workload lies, and the framework itself cannot help with it. For analytical services, data computing is the core of the core.

Using Spring Cloud as the reference framework, this article discusses and compares several ways of implementing data computing in microservices. The comparison roughly covers the following dimensions:

· Application difficulty: whether it is easy to integrate with the microservice framework

· Algorithm implementation difficulty: whether algorithms are simple to write

· Computing power: whether it is powerful enough to handle all computing tasks

· Coupling: whether it introduces tight coupling between services, or between a service and its data sources

· Hot deployment: whether a modified algorithm can be switched in without stopping the service

· Scalability: whether it can provide scalable computing power

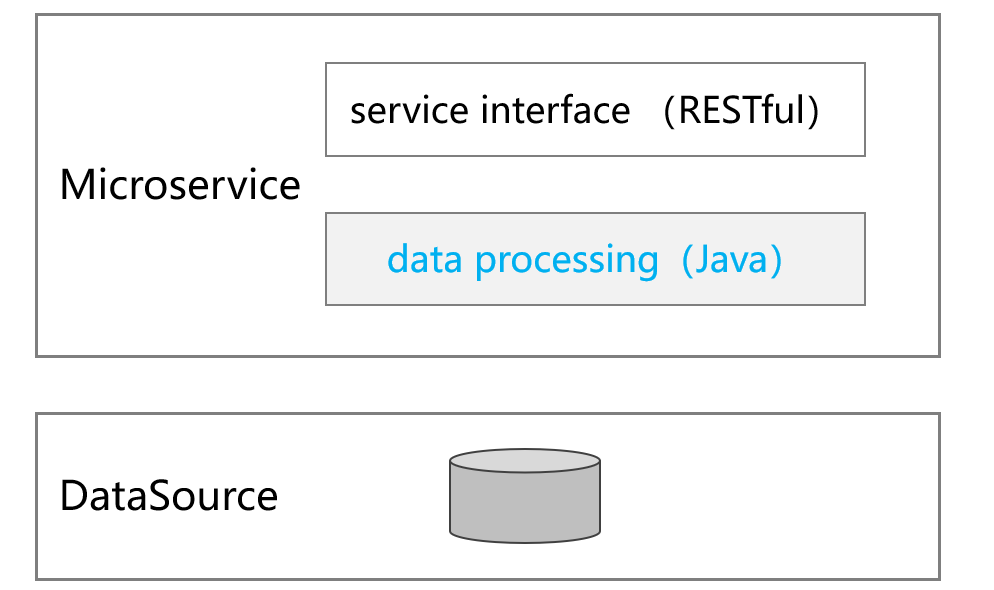

Java

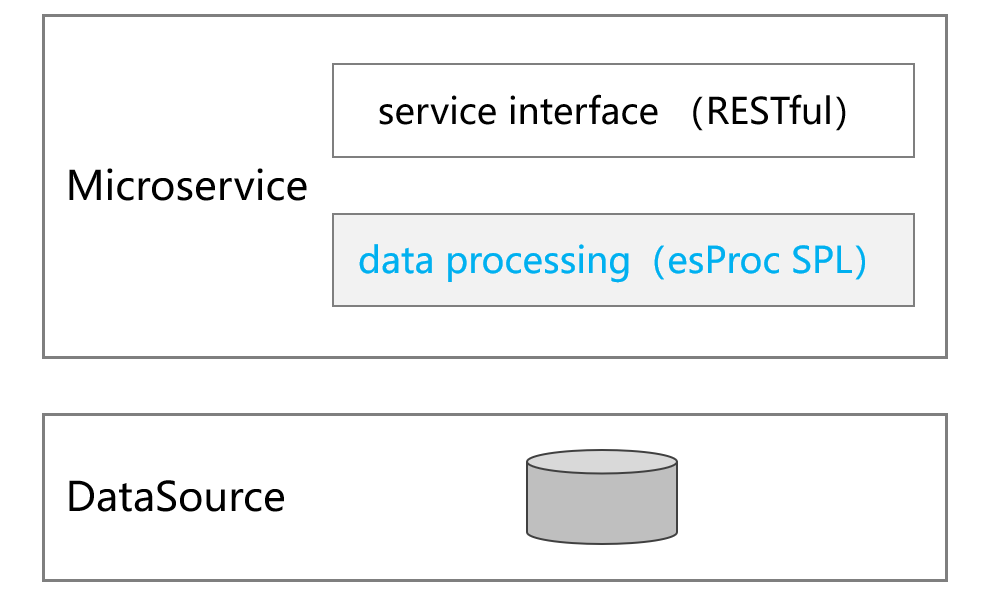

The default development language of most mainstream microservice development frameworks, including Spring cloud, is Java. Using java to implement data processing can be seamlessly combined with the microservice framework, and can be extended as needed. The position in the microservice architecture is as follows:

So what are the options for implementing microservice data processing using Java?

Stream

Java 8 introduced Stream, a lazily evaluated library for computing over collections. It greatly enhanced Java's set-computing capability, and lambda syntax made calculation expressions more concise.

//Filtering
IntStream r1=IntStream.of(1,3,5,2,3,6).filter(m->m>2);
//Sorting (a stream can be operated on only once, so a new one is created here)
Stream<Integer> r2=IntStream.of(1,3,5,2,3,6).boxed().sorted();
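The "lazy evaluation" mentioned above can be observed directly. The following minimal sketch logs each element as it flows through the pipeline. It requires Java 9+, and the class and method names are illustrative, not from the original. Nothing is logged while the intermediate operations are being chained; the elements are visited only when the terminal `collect` runs.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.IntStream;

class LazyStreamDemo {
    // Runs a filter pipeline and records in `trace` the order in which things happen.
    static List<Integer> filterAndCollect(List<String> trace) {
        // Intermediate operations only describe the pipeline; no element is visited yet.
        IntStream pipeline = IntStream.of(1, 3, 5, 2, 3, 6)
                .peek(n -> trace.add("visit " + n))
                .filter(n -> n > 2);
        trace.add("pipeline built");
        // The terminal operation pulls the elements through peek and filter one by one.
        return pipeline.boxed().collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<String> trace = new ArrayList<>();
        System.out.println(filterAndCollect(trace)); // [3, 5, 3, 6]
        System.out.println(trace.get(0));            // pipeline built
    }
}
```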

However, Stream struggles with computations on records, and records are the focus of microservice data processing. Take this example: sort the orders by Client in descending order, and then by Amount in ascending order. The code is as follows:

// Orders is a Stream<Order>; Order is a record defined as follows:
// record Order(int OrderID, String Client, int SellerId, double Amount, Date OrderDate) {}
Stream<Order> result=Orders
.sorted((o1,o2)->Double.compare(o1.Amount(),o2.Amount()))
.sorted((o1,o2)->CharSequence.compare(o2.Client(),o1.Client()));

The code is far from concise (much more verbose than SQL). The sort keys also have to be written in reverse order, with the secondary key (Amount) sorted first, which is somewhat awkward. In addition, a record automatically implements accessors and the equals and hashCode methods. If an ordinary entity class is used instead, these have to be written by hand, which makes the code even longer.
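The sort keys appear in reverse order because `Stream.sorted` is stable for ordered streams. Sorting by the secondary key first and then by the primary key therefore leaves rows with equal primary keys in secondary-key order. The sketch below requires Java 16+ and uses a simplified, hypothetical `Order` record without OrderDate. It checks that this two-pass approach gives the same result as a single comparator chain in which the keys are listed in their natural priority order.

```java
import java.util.Comparator;
import java.util.List;
import java.util.stream.Collectors;

class OrderSortDemo {
    // Hypothetical, simplified version of the article's Order record (no OrderDate).
    record Order(int orderId, String client, double amount) {}

    static final List<Order> SAMPLE = List.of(
            new Order(1, "ABC", 300), new Order(2, "XYZ", 100),
            new Order(3, "ABC", 100), new Order(4, "XYZ", 500));

    // Two passes, as in the article: the secondary key (amount) is sorted first.
    // This is correct only because Stream.sorted is stable for ordered streams.
    static List<Integer> twoPassSort(List<Order> orders) {
        return orders.stream()
                .sorted(Comparator.comparingDouble(Order::amount))
                .sorted(Comparator.comparing(Order::client).reversed())
                .map(Order::orderId)
                .collect(Collectors.toList());
    }

    // One pass with a comparator chain: client descending, then amount ascending.
    static List<Integer> chainedSort(List<Order> orders) {
        return orders.stream()
                .sorted(Comparator.comparing(Order::client).reversed()
                        .thenComparingDouble(Order::amount))
                .map(Order::orderId)
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        System.out.println(twoPassSort(SAMPLE)); // [2, 4, 3, 1]
        System.out.println(chainedSort(SAMPLE)); // [2, 4, 3, 1]
    }
}
```

The comparator chain reads in the natural order, but it is still noticeably longer than an SQL `ORDER BY Client DESC, Amount`.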

Join calculations are not easy to implement with Stream either. A hand-coded join is lengthy and logically complex. Left joins and full joins each need their own hand-written logic with different key code, which is harder still and a challenge even for professional Java programmers. The root cause is that Java lacks a professional structured-computing class library; Stream is an improvement, but it is still far from enough.
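To make the point concrete, here is a minimal sketch of a hand-coded hash join between orders and employees on SellerId = EId. It requires Java 16+ and uses simplified, hypothetical records. The inner join and the left join share the lookup map but need different probe logic. A full join would need yet another pass over the employees that matched nothing (not shown).

```java
import java.util.List;
import java.util.Map;
import java.util.function.Function;
import java.util.stream.Collectors;

class HandJoinDemo {
    // Hypothetical, simplified records for the two tables.
    record Order(int orderId, int sellerId, double amount) {}
    record Employee(int eid, String name) {}
    // One joined row; name is null when no employee matches (left join only).
    record OrderWithSeller(int orderId, double amount, String name) {}

    // Build a lookup map on the join key; assumes eid is unique (toMap throws otherwise).
    static Map<Integer, Employee> indexById(List<Employee> emps) {
        return emps.stream().collect(Collectors.toMap(Employee::eid, Function.identity()));
    }

    // Inner join: keep only orders whose sellerId has a matching employee.
    static List<OrderWithSeller> innerJoin(List<Order> orders, List<Employee> emps) {
        Map<Integer, Employee> byId = indexById(emps);
        return orders.stream()
                .filter(o -> byId.containsKey(o.sellerId()))
                .map(o -> new OrderWithSeller(o.orderId(), o.amount(), byId.get(o.sellerId()).name()))
                .collect(Collectors.toList());
    }

    // Left join: keep every order, filling name with null when there is no match.
    static List<OrderWithSeller> leftJoin(List<Order> orders, List<Employee> emps) {
        Map<Integer, Employee> byId = indexById(emps);
        return orders.stream()
                .map(o -> {
                    Employee e = byId.get(o.sellerId());
                    return new OrderWithSeller(o.orderId(), o.amount(), e == null ? null : e.name());
                })
                .collect(Collectors.toList());
    }
}
```

Even this simple equi-join needs a dedicated result type and a separate method per join type, which is the verbosity the paragraph above describes.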

As a compiled language, Java does not support hot deployment of modified logic out of the box. A change usually means recompiling and restarting the service, which is a serious obstacle when microservice computing logic changes frequently.

For more information about using Stream for data processing, refer to "Are You Trying to Replace SQL with Java 8 Stream?".

Java computing libraries

Given the shortcomings of Stream, a third-party Java computing library can be used for data processing in some specific scenarios. There are two kinds of such libraries. One kind makes up for Java's shortcomings with SQL; the other simplifies data processing with objects similar to the pandas DataFrame in Python.

Java computing libraries that provide SQL capabilities include CSVJDBC, XLSJDBC, CData Excel JDBC and xlSQL. As their names suggest, these tools mainly target file computing, and most of them are not mature enough.

Take the relatively mature CSVJDBC as an example. It can be integrated with Spring Cloud as a jar package and lets you write calculations in SQL. However, it supports only a limited set of operations, such as conditional queries, sorting and group aggregation. Set operations, subqueries and joins are not supported. Moreover, CSVJDBC can only use CSV files as its data source, so other kinds of data must first be converted to CSV, which greatly limits its use.

DataFrame-style Java computing libraries include Tablesaw, Joinery, Morpheus, Datavec, Paleo and Guava. Like CSVJDBC, these tools are still not very mature. Take Tablesaw as an example: it integrates well, since it can be added to Spring Cloud as a jar package, and it can handle sorting, group aggregation and joins. However, it does not support complex calculations well; although it accepts lambda syntax, complex calculations still require a lot of code.

For more details about Java computing libraries, refer to "Looking for the Best Java Data Computation Layer Tool".

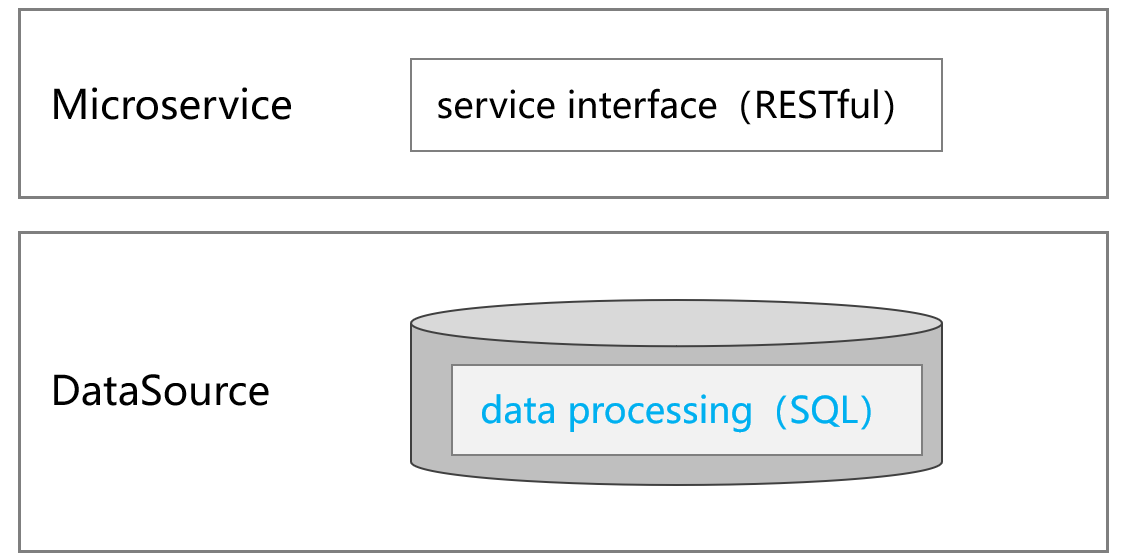

SQL

Besides using SQL inside Java through such libraries, there is another option for simple and convenient data processing in microservices: a database with fuller SQL support, typically an RDB.

Implementing calculations in SQL is much simpler than in Java, but pushing data processing down into the database has obvious drawbacks. The first question is how to design the databases in a microservice architecture: do multiple microservices share one database, or does each microservice have its own? A shared database inevitably couples the services to each other and easily becomes a single point of failure that brings services down. A database per service, on the other hand, creates a conflict between how the data is distributed and how it is used.

Microservices are split and designed by business domain. In TP (transactional) scenarios, distributed transactions are already a very hard problem. In AP (analytical) scenarios, which focus on data processing, how should data be distributed once the services are split?

Analytical scenarios involve large amounts of data, and business queries span a wide range of it. Storing the full data set in every database makes data transfer and maintenance very expensive. Storing only part of it means that a query touching data owned by several services requires cross-service calls. Under the database-per-service principle, other microservices may not access a service's database directly; they must go through the data access interface that the service exposes. The data from multiple services is then gathered in one place and computed in Java, which brings back all the problems of data processing in Java.
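A rough sketch of this pattern in Java follows. It assumes two hypothetical services, one owning orders and one owning employees. Each `Supplier` stands in for a call to that service's data access interface, which in practice would be a REST or RPC client. The cross-service join and aggregation are then done in application code. The record and method names are illustrative only.

```java
import java.util.List;
import java.util.Map;
import java.util.concurrent.CompletableFuture;
import java.util.function.Supplier;
import java.util.stream.Collectors;

class ApiCompositionDemo {
    // Hypothetical records returned by the two services.
    record Order(int sellerId, double amount) {}
    record Employee(int eid, String dept) {}

    // Each Supplier stands in for a call to another service's data access
    // interface (for example a REST client); neither is a real API.
    static Map<String, Double> salesByDept(Supplier<List<Order>> orderService,
                                           Supplier<List<Employee>> employeeService) {
        // Fetch from both services concurrently.
        CompletableFuture<List<Order>> orders = CompletableFuture.supplyAsync(orderService);
        CompletableFuture<List<Employee>> emps = CompletableFuture.supplyAsync(employeeService);
        // When both responses are in, join and aggregate in application code.
        return orders.thenCombine(emps, (os, es) -> {
            Map<Integer, String> deptOf = es.stream()
                    .collect(Collectors.toMap(Employee::eid, Employee::dept));
            return os.stream()
                    .filter(o -> deptOf.containsKey(o.sellerId()))
                    .collect(Collectors.groupingBy(o -> deptOf.get(o.sellerId()),
                            Collectors.summingDouble(Order::amount)));
        }).join();
    }
}
```

Whatever the transport, the join and the grouping end up as hand-written Java, so the difficulties described in the Java section apply again.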

In practice, shared databases are rare: they seriously violate the design principles of microservices, largely defeat their purpose, and have obvious drawbacks. The database-per-service scheme, for its part, is costly both to implement and to maintain. Moreover, putting data processing logic into the database tightly couples the service to the database, which hinders service scaling and database replacement. The mainstream practice is therefore still to use the database for persistent storage and to do most data processing on the application side (for example, in Java).

Java is closely tied to the microservice framework but is too complex for data processing. SQL is simple but, because of database constraints, is rarely used for data processing in microservices. Are there other technologies that can implement data processing in microservices?

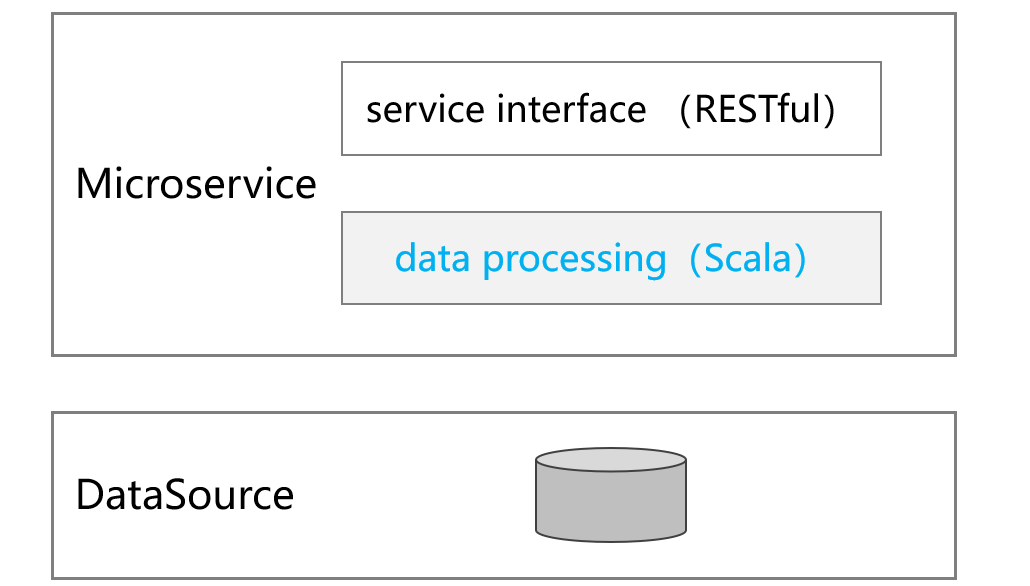

Scala

Scala was originally designed as a general-purpose language, but what really drew attention to it was its professional structured data computing capability. This covers both distributed computing on the Spark framework and local computing without a framework or service. Scala runs on the JVM and integrates naturally with Java, so it can be embedded in the microservice framework to provide data processing capability.

Here is what data processing code looks like in Scala. In general, it is fairly concise.

// Assumes spark is a SparkSession and Orders is a DataFrame already loaded
import org.apache.spark.sql.functions._
//Conditional query
val condition=Orders.where("Amount>1000 and Amount<=3000 and Client like '%S%'")
//Sorting
val orderBy=Orders.sort(asc("Client"),desc("Amount"))
//Group aggregation
val groupBy=Orders.groupBy(year(Orders("OrderDate"))).agg(sum("Amount"))
//Join
val Employees = spark.read.option("header", "true").option("sep","\t")
.option("inferSchema", "true")
.csv("D:/data/Employees.txt")
val join=Orders.join(Employees,Orders("SellerId")===Employees("EId"),"Inner")
.select("OrderID","Client","SellerId","Amount","OrderDate","Name","Gender","Dept")

Like Java, Scala is a compiled language and does not support hot deployment. In addition, Scala has a steep learning curve, which may be one reason it has remained relatively niche.

Kotlin

Like Scala, Kotlin is a compiled language that runs on the JVM. It can be combined with microservice frameworks such as Spring Cloud to complete data processing tasks. Unlike Scala, Kotlin is offered as a built-in option in many IDEs, so it can be selected directly when creating a Spring Cloud project instead of being added manually.

In terms of expressing calculations, Kotlin can be seen as an enhanced Stream. It simplifies the lambda syntax, adds eagerly evaluated collection operations, and supplies many more collection functions.

However, for complex structured data computing, Kotlin offers no significant improvement over Stream.

For example, to sort the orders by Client in descending order and then by Amount in ascending order:

//Orders is a List<Order>; Order is defined as follows:
//data class Order(var OrderID: Int,var Client: String,var SellerId: Int, var Amount: Double, var OrderDate: Date)
val result=Orders.sortedBy{it.Amount}.sortedByDescending{it.Client}

Multi-field group aggregation groups the orders by year and SellerId, then sums Amount and counts the rows in each group:

data class Grp(var OrderYear:Int,var SellerId:Int)
data class Agg(var sumAmount: Double,var rowCount:Int)
val result=Orders.groupingBy{Grp(it.OrderDate.year+1900,it.SellerId)}
.fold(Agg(0.0,0)){acc, elem->Agg(acc.sumAmount + elem.Amount,acc.rowCount+1)}
.toSortedMap(compareBy<Grp>{it.OrderYear}.thenBy{it.SellerId})
result.forEach{println("group fields:${it.key.OrderYear}\t${it.key.SellerId}\t aggregate fields:${it.value.sumAmount}\t${it.value.rowCount}")}

In single-field group aggregation, the grouping value can be stored directly as the map key. With multiple fields this is not possible, because a map key holds a single value. You therefore have to define a structured object such as Grp that combines the grouping fields, and use it as the key. Like Stream, Kotlin does not support dynamic data structures, so the structure of the result must also be defined before the calculation, which makes the code particularly cumbersome.
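Java Stream has the same limitation. The sketch below requires Java 16+ and uses hypothetical, simplified records, with `java.time.LocalDate` in place of the deprecated `Date.getYear()`. The composite key `Grp` and the result type `Agg` must both be declared before the grouping can be written. A `TreeMap` with an explicit comparator keeps the groups ordered by year and then SellerId, as in the Kotlin code.

```java
import java.time.LocalDate;
import java.util.Comparator;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.stream.Collectors;

class MultiKeyGroupDemo {
    // Hypothetical, simplified order record.
    record Order(int sellerId, double amount, LocalDate orderDate) {}
    // Composite grouping key: like Kotlin's Grp, it must be declared in advance.
    record Grp(int orderYear, int sellerId) {}
    // Result type for each group, also declared in advance.
    record Agg(double sumAmount, long rowCount) {}

    static final Comparator<Grp> BY_YEAR_THEN_SELLER =
            Comparator.comparingInt(Grp::orderYear).thenComparingInt(Grp::sellerId);

    static Map<Grp, Agg> groupByYearAndSeller(List<Order> orders) {
        return orders.stream().collect(Collectors.groupingBy(
                o -> new Grp(o.orderDate().getYear(), o.sellerId()),
                // A TreeMap keeps the groups ordered by year, then seller.
                () -> new TreeMap<Grp, Agg>(BY_YEAR_THEN_SELLER),
                // Sum the amounts and count the rows in one pass, then wrap them in Agg.
                Collectors.teeing(
                        Collectors.summingDouble(Order::amount),
                        Collectors.counting(),
                        (sum, count) -> new Agg(sum, count))));
    }
}
```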

In addition, Kotlin has no built-in join operation, so joins must be hand-coded as in Java, and it cannot be hot-switched either. As a data processing tool for microservices, it is far from ideal.

For more information about Kotlin's data processing capabilities, refer to "Are You Trying to Replace SQL with Kotlin?".

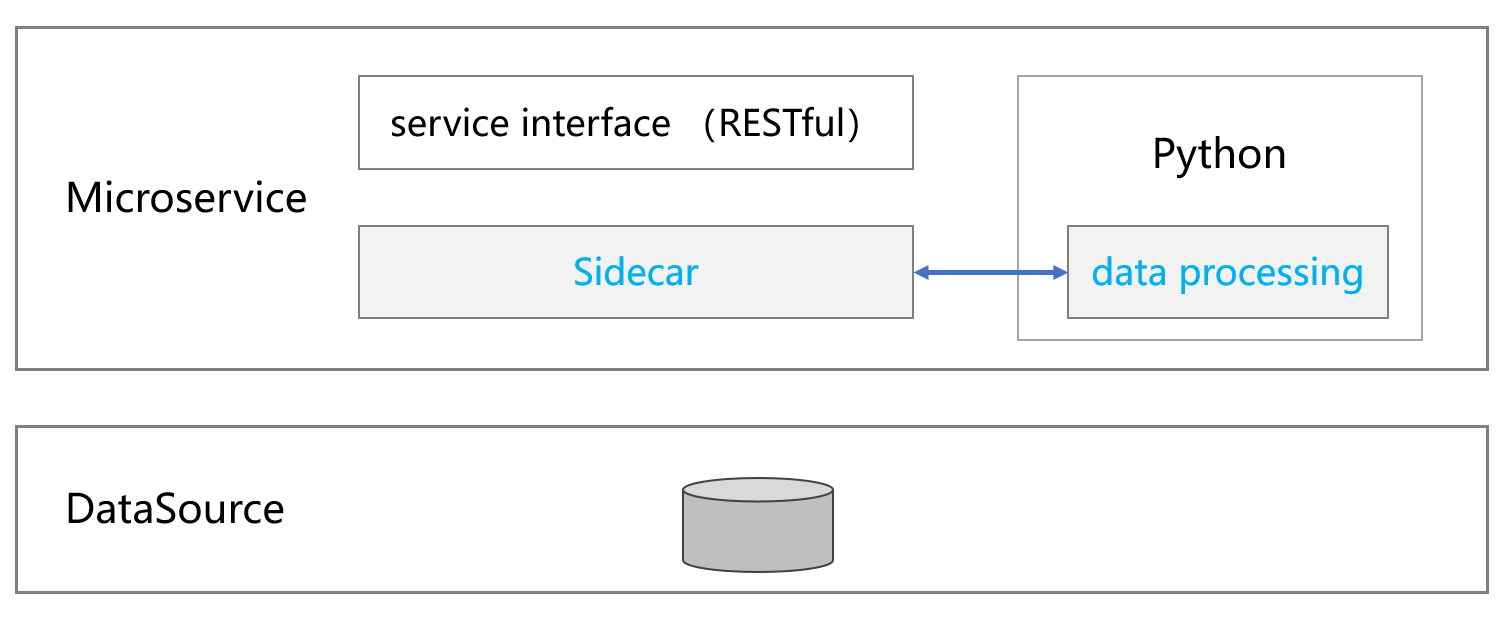

Python

When it comes to data processing, Python has to be mentioned. Its many computing libraries make all kinds of data processing convenient, and pandas is a leader in structured data processing. However, Python sits outside the Java ecosystem and cannot be embedded directly in Spring Cloud.

Instead, a Python computing service has to be integrated through Sidecar, the interface Spring Cloud provides for integrating services written in other languages. In short, Sidecar lets Spring Cloud integrate a third-party REST service, enabling two-way communication between Java and Python.

pandas makes most calculations convenient, joins included. A join in pandas connects two tables on one or more fields to form a wide table, much as in SQL. Because pandas supports step-by-step computation, the code is easy to understand.

However, pandas has some problems with foreign-key associations:

1. The merge function resolves only one association at a time, which is cumbersome when there are many associations.

2. Each resolved association produces a new table and copies the data, which costs time and memory. The same problem arises when associating with a dimension table.

3. In a self-join (circular association), two tables are still joined into a new table, and an association that has already been established cannot be reused.

For the shortcomings of pandas in structured data computing, refer to "Structured data calculations that pandas is not good at".

The engineering issues of using Python for microservice data computing cannot be ignored either. Although Sidecar makes interoperation possible, it falls far short of a solution native to the Java ecosystem (whether written in Java or embedded in it), and its maintenance cost is high.

esProc SPL

esProc SPL is a professional open-source data computing engine. It can be regarded as an enhanced Java computing library and integrates seamlessly with Spring Cloud for microservice data processing. Integration only requires importing the corresponding esProc jar packages. For specific integration steps, refer to "Spring Cloud integrate SPL to implement microservices".

esProc supports a wide variety of data sources, such as RDBs, NoSQL databases, JSON, CSV and WebService, far more than general Java computing libraries.

For implementing data processing algorithms, esProc has its own SPL syntax. The following example shows how concise SPL is.

Task: from the stock record table, find the stocks whose price has risen for 5 or more consecutive days, together with the number of rising days. A day whose price equals the previous day's price counts as rising.

| | A | B |
|---|---|---|
| 1 | =connect@l("orcl").query@x("select * from stock_record order by ddate") | |
| 2 | =A1.group(code) | |
| 3 | =A2.new(code,~.group@i(price<price[-1]).max(~.len())-1:maxrisedays) | Calculate the max continuous rising days of each stock |
| 4 | =A3.select(maxrisedays>=5) | Select qualified records |

Even in SQL, this task requires three levels of nested subqueries (interested readers can try it), let alone in Java (Stream, and Kotlin as well). SPL supports step-by-step computation, which makes it better suited than SQL to such tasks.
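For comparison, the same logic hand-written in Java might look like the following sketch, which requires Java 16+. `StockRecord` is an assumed in-memory shape of a stock_record row, with field names taken from the SPL script; loading it from the database is omitted. As in the SPL code, a day whose price is not lower than the previous day's counts as rising. The result keeps stocks whose longest streak is at least the given number of days.

```java
import java.time.LocalDate;
import java.util.Comparator;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.stream.Collectors;

class RisingStreakDemo {
    // Assumed in-memory shape of one stock_record row (names taken from the SPL script).
    record StockRecord(String code, LocalDate ddate, double price) {}

    // Longest run of consecutive rising days in a date-ordered price series.
    // A price equal to the previous day's also counts as rising, as in the SPL code.
    static int maxRiseDays(List<Double> prices) {
        int best = 0;
        int current = 0;
        for (int i = 1; i < prices.size(); i++) {
            current = prices.get(i) >= prices.get(i - 1) ? current + 1 : 0;
            best = Math.max(best, current);
        }
        return best;
    }

    // Stocks whose longest rising streak is at least minDays, mapped to that streak.
    static Map<String, Integer> risingStocks(List<StockRecord> records, int minDays) {
        // Sort by date, then group prices by stock code (each list stays in date order).
        Map<String, List<Double>> pricesByCode = records.stream()
                .sorted(Comparator.comparing(StockRecord::ddate))
                .collect(Collectors.groupingBy(StockRecord::code, TreeMap::new,
                        Collectors.mapping(StockRecord::price, Collectors.toList())));
        Map<String, Integer> result = new TreeMap<>();
        pricesByCode.forEach((code, prices) -> {
            int days = maxRiseDays(prices);
            if (days >= minDays) {
                result.put(code, days);
            }
        });
        return result;
    }
}
```

The explicit loop in `maxRiseDays` plays the role of `group@i(price<price[-1])` plus `max(~.len())-1` in cell A3, and the final `if` corresponds to A4.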

It is also worth mentioning that SPL is interpreted and supports hot switching. A modified calculation script takes effect without restarting the service, which compiled languages cannot match.

In addition, SPL has complete computing capabilities and can handle all data processing tasks. For more information about SPL, refer to the SPL learning materials.

In general, when implementing data processing in a Java microservice framework such as Spring Cloud, each option has its weakness:

· Stream and Kotlin have the highest implementation complexity, because Java lacks a structured-computing class library. The situation is improving but is still far from sufficient.

· Java computing libraries are often immature.

· SQL struggles to take on data processing because of how databases are constrained in microservices.

· Scala itself is hard to learn and use.

· Python has rich computing libraries, yet many operations are still hard to implement, and integrating it with a Java framework carries a high engineering cost.

esProc SPL combines almost all the advantages of the other approaches in one package. Compared with SQL, it supports step-by-step computation, which makes complex calculations simpler. Compared with Java, it provides a rich computing class library and more concise algorithms. It is simpler than Scala. Compared with Python, it has concise syntax, embeds seamlessly in the microservice framework, and is easier to use. SPL is therefore an ideal choice for data processing in microservices.

SPL Official Website 👉 http://www.scudata.com

SPL Feedback and Help 👉 https://www.reddit.com/r/esProc

SPL Learning Material 👉 http://c.scudata.com

SPL Source Code and Package 👉 https://github.com/SPLWare/esProc

Discord 👉 https://discord.gg/ydhVnFH9

Youtube 👉 https://www.youtube.com/@esProc_SPL
