Open-source SPL optimizes batch operating of insurance company from 2 hours to 17 minutes

Abstract

The batch operating task for associating new insurance policies with historical policies is very time-consuming, click to learn: Open-source SPL optimizes batch operating of insurance company from 2 hours to 17 minutes

Problem description

In the vehicle insurance business of insurance company P, it needs to associate new policies with the historical policies of previous years, which is called the historical policy association task in the batch operating. When reminding regular customers to renew their policies, it needs to calculate previous years’ policies in the specified time period. For example, the provincial-level companies need to regularly calculate the historical policies corresponding to the renewable policies in a specific month. Currently such batch data processing task is implemented in stored procedures, which has the disadvantages of poor performance and long running time, and the running time increases in direct proportion to the number of days of new policies. When calculating the association between the historical policies and 10-day new policies, it will take 47 minutes; and will take 112 minutes, nearly 2 hours, in the case of 30-day new policies; if the time span is larger, the running time will be unbearably long, and it will basically become an impossible task.

Analysis and solution

Step 1, understand the characteristics of calculation task. The stored procedure of batch operating is very long, with more than 2,000 lines. The data tables to be used include the policy table and the policy-vehicle detail table. For larger provinces, both the two tables have tens of millions of data amount, and the incremental data of new policies is 10,000 to 20,000 every day. The structure of two tables is roughly as follows (for desensitization reason, all actual field names are replaced by xxxx, and only comments are kept):

Policy table

xxxx char(xx) not null ,--Policy code (primary key)

xxxx char(xx),--Policy No.

xxxx datetime year to second,--Start date

xxxx datetime year to second--End date

Policy-vehicle detail table

xxxx char(22) not null , --Policy code (primary key)

xxxx decimal(x,x) not null ,--Detail code (primary key)

xxxx varchar(xx),--License plate code

xxxx char(x),--Type of license plate

xxxx varchar(xx),--vin code

xxxx varchar(xx)--Vehicle frame code

Comparison table of old and new policies:

xxxx char(xx) not null , --Policy code

xxxx char(xx) --Policy code of previous year

The input parameters for calculation of previous years' policies are the start date (istart) and the end date (iend), and the calculation target is the comparison table of the old and new policies. It will be discarded if the corresponding old policy cannot be found.

The calculation process is roughly as follows:

1. From the policy table, find the new policies with a start date in the specified time period (between istart and iend).

2. Associate previous year’s history policies with new policies. Association conditions: the same vin code; or the same vehicle frame code; or the license plate type and license plate code are the same at the same time. Also, discard the data where old policy No. is null or empty string, and discard the same data from the old and new policies.

3. In all old policies, find policies whose end date is within 90-day time difference from new policies, the found policies are previous year’s policies.

Second step, analyze the performance bottleneck. After many tests, we found that the key to the performance optimization of the original stored procedure depends on four association calculations. The first calculation is to associate the new policy table with the policy-vehicle detail table through the policy code to obtain the vehicle information; and the remaining three calculations are to associate the policy-vehicle detail table respectively through the vin code, frame number, and license plate number (license plate type) to get the historical policies. For more than 100,000 new policies in ten days, the time for these four associations is tolerable; while for over 400,000 new policies in a month, the calculation time will take 1 to 2 hours.

Step 3, design the optimization scheme. The key point of performance optimization is to solve the bottleneck “four association (JOIN) operations”. In relational databases, the HASH algorithm is generally used to perform JOIN, and its complexity is SUM(Ni*Mi). If the data of two large historical policy tables (policy and policy details) are sorted by the associated field (policy code) in advance, the ordered merging algorithm with lower-complexity (M+N) can be used to improve the performance of JOIN. Moreover, the new policies are less in number, and can all be read into memory for repeated use. After the historical policy table with huge amount of data is merged in an orderly manner, the remaining three associations with the new policy table can be performed in the same traversal, hereby achieving multipurpose traversal, reducing the times of traversal, and effectively speeding up. The advantage of doing so is that the calculation time will not be significantly increased, whether it is ten-day or one-month new policies.

Step 4, design the implementation scheme. Since the relational databases are based on the theory of unordered set, there is no way to save ordered results in advance. Moreover, the multipurpose traversal statements cannot be written in SQL. Therefore, the above optimization scheme cannot be implemented in the database, and it needs to export the data to a file for self-processing. The association of historical policies is a typical batch operating task, and the data is imported into the batch operating database from the production database every day. Since the data was meant to be moved, we can export them to the file during the moving process to implement the high-performance algorithms described above. Moreover, the file system is better in terms of IO performance, thus exporting to file will be faster than exporting to database; storing the data externally is also feasible in practice.

Step 5, export the data. Export the policy table and the policy-vehicle detail table from the database, and sort by policy code, and store them in the high-performance file. The sorting here is very important and is the prerequisite for the implementation of subsequent ordered merging algorithm. Due to the poor performance of database JDBC, the speed will be relatively slow when exporting all historical data for the first time. Since then, however, new data will be exported every day, and the incremental update of high-performance storage file will be very fast.

Step 6, write new algorithm code to implement performance optimization. The number of new policies is 10,000 to 20,000 in one day, and 300,000 to 600,000 in 30 days. For the data of such order of magnitude, they can be directly stored into the memory, and then perform three association calculations to the historical policies to obtain the comparison table of the old and new policies.

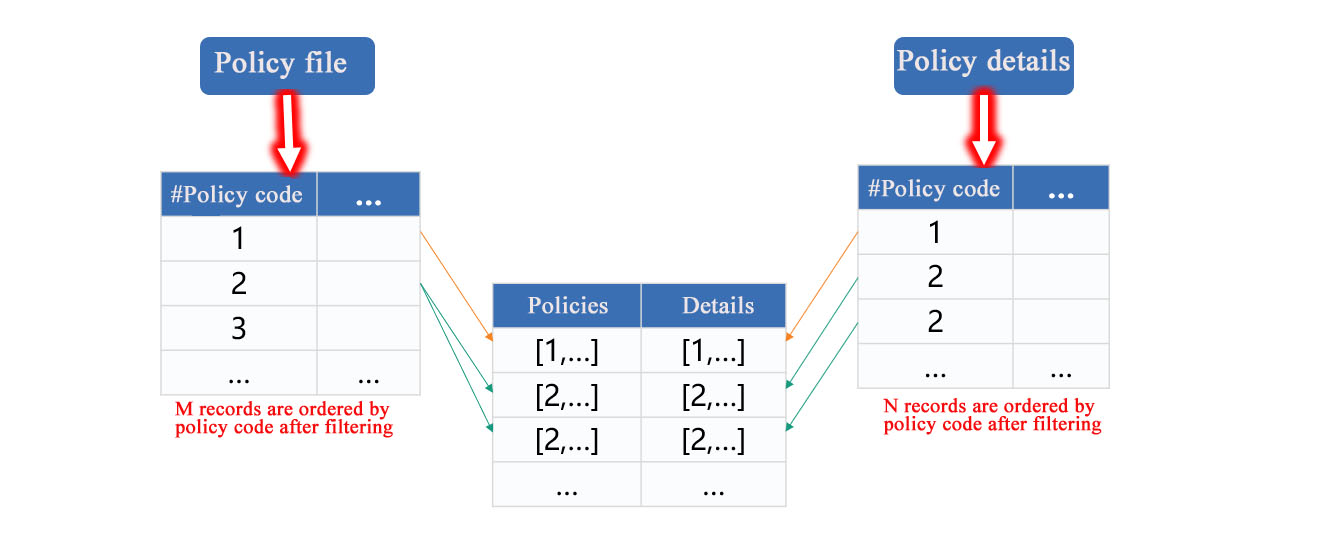

Associate two cursors with the policy code. As mentioned earlier, both files have been sorted by policy code, and hence the association here will be performed by ordered merging algorithm, and each table only needs to be traversed once, as shown in the following figure:

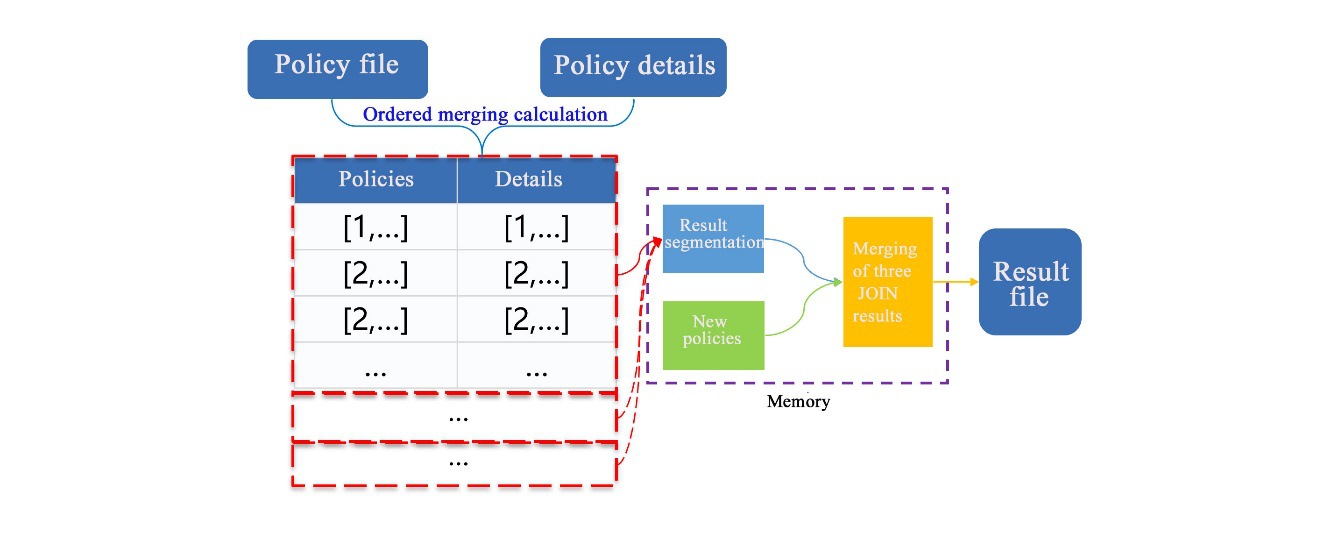

After the two files are associated, they will be traversed in a loop. Each time a part of the data is taken out, such data will be successively associated with new policies in the memory through the vin code, frame number, and license plate number (type of license plate). After the results are merged, the new data file will be generated. See the figure below:

As shown in the figure, one traversal implements four association calculations, and there is no need to traverse four times, and also no need to generate multiple intermediate results. As a result, the number of traversals is reduced, and the time to read and write to the hard disk is reduced, and the performance is significantly improved.

Actual effect

After the optimization scheme is drawn up according to the calculation characteristics, it needs to select an appropriate tool to implement the performance optimization. SQL has been excluded during analysis. If the high-level languages such as Java or C++ was used, it could implement this algorithm; however, the amount of coding is too large and the implementation period is too long, as a result, it is prone to hidden trouble with code errors, and it is also difficult to debug and maintain. The open-source esProc SPL provides support for all the above algorithms, including mechanisms such as the high-performance file, file cursor, ordered merging & segmented data-fetching, in-memory association and multipurpose traversal, which allows us to quickly implement this personalized calculation with less amount of code.

We completed the verification of performance optimization after a few days of programming, debugging and testing, the results showed that the performance was improved obviously. Before optimization, it takes 47 minutes to do the batch operating with database stored procedure when calculating the association between historical policies and 10-day new policies. After optimization, it takes 13 minutes. In the case of 30-day new policies, it takes 2 hours before optimization, and it only takes 17 minutes after optimization, increasing the speed by nearly 7 times. Moreover, it can be seen that the execution time of new algorithm does not increase greatly, and does not increase in direct proportion to the number of days of new policies just like stored procedure.

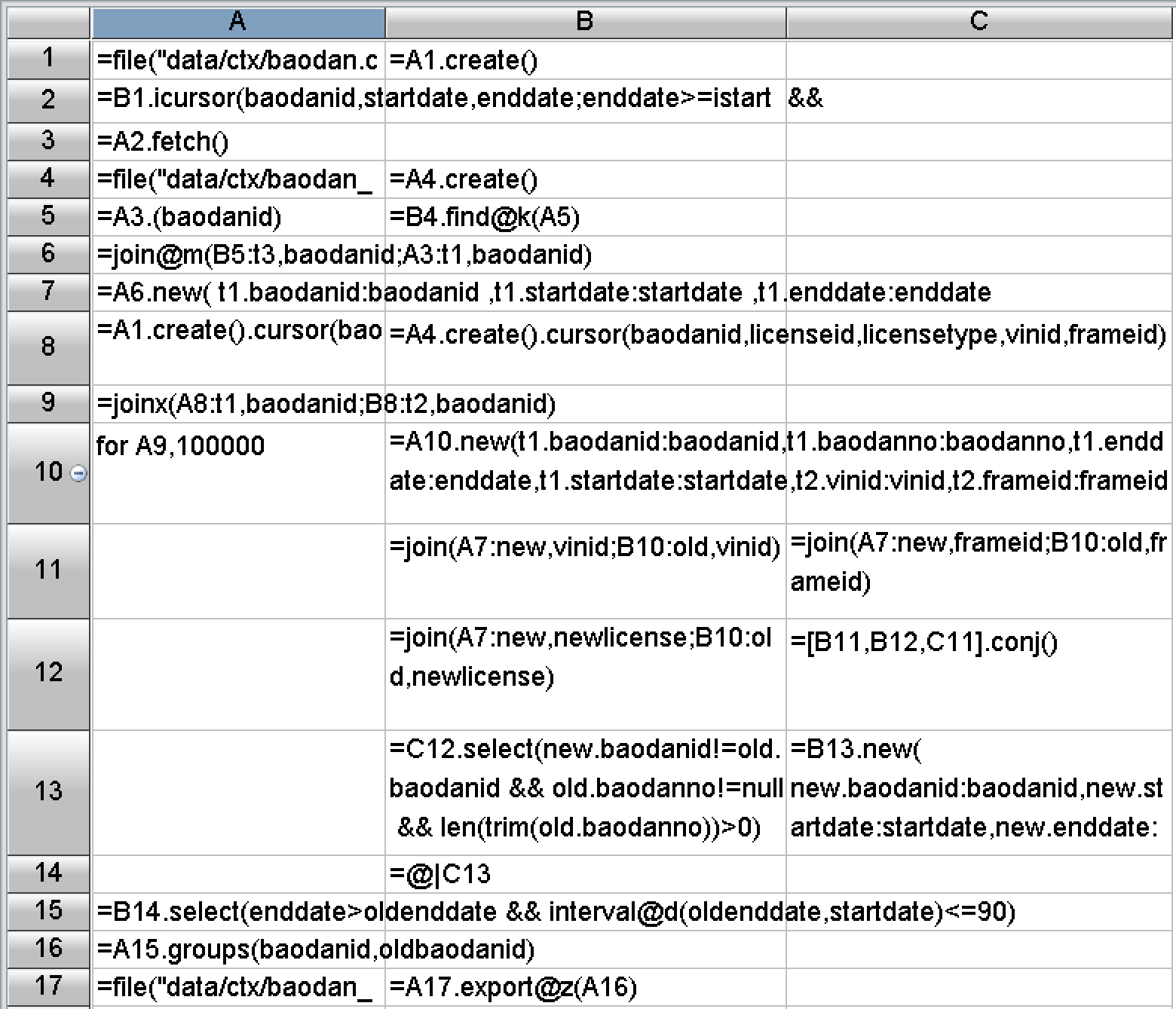

In terms of programming difficulty, SPL has made a lot of encapsulations, provided rich functions, and built-in the basic algorithms and storage mechanisms required by the above optimization scheme. Therefore, the actual code is very short and the development efficiency is very high. The code of four association calculations for the above batch operating is shown as below:

Viewing from the total amount of code, there are 2000 lines of code in original stored procedure, and still over 1800 lines after removing the comments; in contrast, coding in SPL takes up less than 500 cells, which is less than 1/3 of the original.

Postscript

To solve the performance optimization problem, the most important thing is to design a high-performance computing scheme to effectively reduce the computational complexity, thereby ultimately increasing the speed. Therefore, we should not only fully understand the characteristics of calculation and data, but also have an intimate knowledge of common high-performance algorithms, only in this way can we design a reasonable optimization scheme according to local conditions. The basic high-performance algorithms used herein can all be found at: Performance Optimization - Preface, where you can find what you are interested in.

Unfortunately, the current mainstream big data systems in the industry are still based on relational databases. Whether it is the traditional MPP or HADOOP system, or some new technologies, they are all trying to make the programming interface closer to SQL. Being compatible with SQL does make it easier for users to get started. However, SQL, subject to theoretical limitations, cannot implement most high-performance algorithms, and can only face helplessly without any way to improve as hardware resources are wasted. Therefore, SQL should not be the future of big data computing.

After the optimization scheme is obtained, we also need to use a good programming language to efficiently implement the algorithms. Although the common high-level programming languages can implement most optimization algorithms, the code is too long and the development efficiency is too low, which will seriously affect the maintainability of the program. In this case, SPL is a good choice, because it has enough basic algorithms, and its code is very concise, in addition, SPL also provides a friendly visual debugging mechanism, which can effectively improve development efficiency and reduce maintenance cost.

SPL Official Website 👉 https://www.scudata.com

SPL Feedback and Help 👉 https://www.reddit.com/r/esProcSPL

SPL Learning Material 👉 https://c.scudata.com

SPL Source Code and Package 👉 https://github.com/SPLWare/esProc

Discord 👉 https://discord.gg/2bkGwqTj

Youtube 👉 https://www.youtube.com/@esProc_SPL

Chinese version