SPL Programming - 11.4 [Big data] Big cursor

We haven’t learned the sorting of big data yet.

Sorting operation is very different. Its calculation result is as large as the source data. If the source data cannot be loaded in memory, the result cannot be loaded for sure, but unlike select()and new(), it cannot be calculated while traversing.

The sorting of big data can only be solved by using external storage as buffer. The general steps are as follows: read in some data, sort in memory and write out the result; Then read the next part, sort and write; …; Finally, a batch of ordered intermediate results will be obtained, and then the merging algorithm will be used to concatenate these intermediate results. This process is complex and beyond the design scope of this book, and we won’t talk about the detailed principle here.

SPL provides the sortx()function to perform big data sorting. This function implements all the above series of processes. Because the sorting result is still big data, sortx() will also return a cursor, but it is not a deferred cursor. sortx() will calculate immediately, and the returned cursor is based on the intermediate results described above.

For example, we want to arrange the order data according to the average unit price from large to small, and then write it to another file:

| A | |

|---|---|

| 1 | =file(“data.txt”).cursor@t() |

| 2 | =A1.sortx(-amount/quantity) |

| 3 | =file(“data.csv”).export@ct(A2) |

Because sorting is from large to small, sortx()uses negative number as the parameter. A2 after calculation is a cursor, which cannot be viewed directly. You can get data with fetch() or write to another file like A3.

sortx()calculates immediately. When it returns after execution, cursor A1 has been traversed. At this time, even deleting data.txt will not affect the action of A3. This is completely different from functions like select(). The deferred cursor returned by the select() function will be traversed synchronously with its basis cursor in the future.

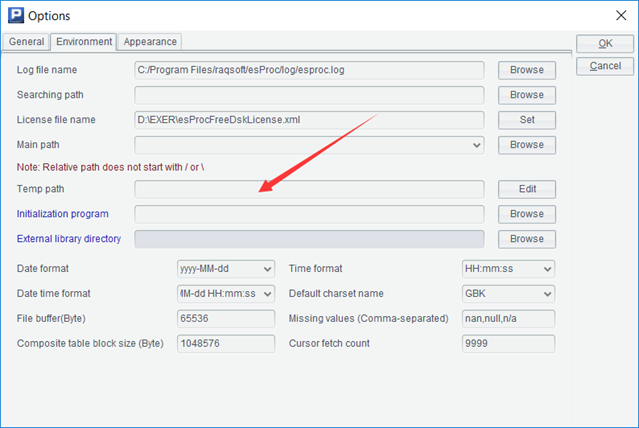

As a reminder, sortx()may be slow and need to wait for some time after running. It also takes up some hard disk space (used to store intermediate results). esProc will use the operating system temporary directory by default, or you can set the temporary directory yourself in the options.

In fact, sorting for big data itself is not commonly used. We usually don’t care about the full sorting result of big data, but only the top N. However, sorting is often the basis of other big data operations. Without an orderly result, other operations are difficult to do.

For example, big grouping.

For the groups()function we used before, the returned result is a table sequence, that is, small data. However, there is still some kind of group aggregation operation, and its return result will be so huge that the memory can’t fit. At this time, there is no way to use groups(), which will lead to memory overflow.

However, as we said earlier, if the data is in order to the grouping key values, we can use the method of group() function, to summarize at the same time during the traversal process, and there will be no overflow. No matter how large the returned grouping result is, it can be calculated normally.

For example, we want to do a grouping by two fields amount and quantity to see how many orders there are in each group with different amount and quantity. The grouping result may be 1 million lines (it’s not big, but we just regard it as big. Suppose it can’t be done with groups()).

The current data has no order for the combination of these two fields. After sorting, we can use the group() function.

| A | |

|---|---|

| 1 | =file(“data.txt”).cursor@t() |

| 2 | =A1.sortx(amount,quantity) |

| 3 | =A2.group(amount,quantity) |

| 4 | =A3.new(amount,quantity,~.count(1):C) |

| 5 | =A3.fetch(100) |

In this way, we can calculate out the big grouping, but because the result is large, we can only fetch() a part to view or write to another file.

We can do other calculations based on it. For example, we want to calculate the total amount and quantity for groups with more than 5 orders of the same amount and quantity (that is, the summary field C>5 in A4).

| A | |

|---|---|

| 1 | =file(“data.txt”).cursor@t() |

| 2 | =A1.sortx(amount,quantity) |

| 3 | =A2.group(amount,quantity) |

| 4 | =A3.new(amount,quantity,~.count(1):C) |

| 5 | =A4.select(C>5).total(sum(C*amount),sum(C*quantity)) |

The group()function returns a deferred cursor. We can attach another select() operation, and then traverse it with total().

Because big grouping is commonly used, SPL provides a groupx() function, which does sorting and grouping together. The above code can be simplified as:

| A | |

|---|---|

| 1 | =file(“data.txt”).cursor@t() |

| 2 | =A1.groupx(amount,quantity;count(1):C) |

| 3 | =A2.select(C>5).total(sum(C*amount),sum(C*quantity)) |

The parameter rules of groupx()are the same as those of groups(), but it assumes that the returned result set is large, so it will sort first (actually it is more complex), then do an ordered grouping, and finally return a cursor. Like sortx(), this is not a deferred cursor. When the two actions are executed together, not only the syntax is simpler, but also the execution speed is better. However, like sortx(), it will temporarily occupy hard disk space.

In addition to the big grouping, there is also the big merging calculation.

Similar to the previous example of merging calculation, if we have two large order files on hand, we need to merge them (or calculate the intersection), and the data must be orderly in advance.

| A | B | |

|---|---|---|

| 1 | =T@c(“data1.txt”) | =T@c(“data2.txt”) |

| 2 | =[A1,B1].mergex@u(id) | >T@c(“all.csv”,A2) |

Assuming that the data is ordered by id, we can directly use mergex()to calculate union. The parameters here are the same as those of merge(), but generally we will not establish a primary key on the cursor, so we need to write the association key used in the merge operation.

Because the result set may be large, mergex()also returns a cursor, but it is a deferred cursor and will not traverse automatically. Unlike select() function, mergex()may generate a deferred cursor for multiple basis cursors. After traversing it, it will also traverse all the basis cursors. The previously mentioned select() and other functions only have one basis cursor.

The T() function uses the @c option to generate a cursor, and it will automatically select the opening method according to the file extension. It can also be used to write out, @c means to write out the cursor, and the write out method will also be selected according to the file extension.

Unlike the in-memory merge()function, mergex() requires that the cursor data must be in order to work, and there is no @o option.

After sorting, the operation that can only be realized by random access can be changed into sequential access. Another similar case is the join operation. Finding associated records with associated key values originally requires random access (searching in the data table), but if the data is in order with associated key, sequential access can achieve the goal.

SPL also provides a joinx() function with x to solve the big join.

| A | B | |

|---|---|---|

| 1 | =T@c(“data1.txt”) | =T@c(“data2.txt”) |

| 2 | =joinx(A1:a,id;B1:b,id) | =A2.fetch(100) |

joinx()can support the homo dimensional association and primary sub association. It also returns a deferred cursor. The form of the table sequence fetched from it is similar to that of the join() in memory. The value of each field is the record in each associated cursor, and then you can continue to use the new() function to realize further reference calculation.

Similarly, joinx()supports left join and full join with @1 and @f. We won’t give detailed examples here. You can generate two data tables with different structures to try to do a big join, and you need to ensure that the association key is orderly, or you should sort with sortx() first.

You may find that there is another situation we have not mentioned, that is, in the case of foreign key association, the dimension table is too large to be loaded into memory. When we talked about switch()and join() related to foreign key on the cursor, the fact table data comes from the cursor, but the dimension table is still a table sequence in memory.

In this case, at present, foreign key can only be understood as join operation and then implemented by joinx(). If the data is disordered to the foreign key of the fact table and the primary key of the dimension table in advance, it needs to be sorted first. Moreover, each joinx() can only resolve one foreign key association, and multiple foreign keys have to do joinx()multiple times. While the switch() and join() for the in-memory dimension table can resolve multiple foreign keys in one traversal.

Unlike select(), groupx() and other functions that only work for one cursor, mergex and joinx work for multiple cursors. So, is it possible to merge and join big data and small data together?

It does exist. The merging and join operations of big data and small data are also big data, and the method of big data should be used. In this case, the small data in memory, that is, the table sequence, should be converted into a cursor, and then the cursor method should be used for operation. SPL provides cursor() function to convert the table sequence or record sequence into a cursor, so that small in-memory data can be simulated into large data and operated together with a file cursor. There are no more examples here. You can refer to relevant help documents when you need them.

We have now learned to use cursor to implement big data operations, but we can only do it right. Another important goal of big data computing is to do it fast, which is also a very complex task, or even a more complex task. Like the traversal reuse technology mentioned earlier, these contents are far beyond the design scope of this book. Here, we just give you an impression. The content of how to improve the performance of big data operation is enough to write another book of the same length. Many calculation methods need to be redesigned, especially to find ways to avoid substantive sorting, because sorting is a very slow action.

In the conventional programming languages for structured data processing, SQL can deal with big data. SQL even achieves data size transparency, that is, users do not need to care about the size of data, which obviously saves people a lot of troubles. This is a huge advantage of SQL. However, using SQL usually needs to load the data into the database, which is too complex and beyond the ability of most non professional programmers.

esProc provides the function of using basic SQL for files and can support most big data operations, so as to avoid the trouble of loading data into the database.

Python is also often used for structured data calculation, but it has no cursor object, and it is very troublesome when processing big data. It is equivalent to implementing the cursor action by yourself, which is basically an impossible task for non professionals.

SPL Programming - Preface

SPL Programming - 11.3 [Big data] Ordered cursor

SPL Programming - 12.1 [Drawing graphics] Canvas and elements

SPL Official Website 👉 https://www.scudata.com

SPL Feedback and Help 👉 https://www.reddit.com/r/esProc_SPL

SPL Learning Material 👉 https://c.scudata.com

SPL Source Code and Package 👉 https://github.com/SPLWare/esProc

Discord 👉 https://discord.gg/cFTcUNs7

Youtube 👉 https://www.youtube.com/@esProc_SPL