Which Is the Best Technique for Separating Cold Data from Hot Data?

As business expands, the database or the data warehouse for an OLAP application stores more and more data, and their workload increases. This results in slower response times. Scaling up or scaling out the database is not enough to solve the problem because both are not only expensive but can hardly push the performance up once the database capacity reaches the limit.

Separating cold data from hot data is a better solution. It stores and computes the small amount of frequently accessed hot data and the large amount of seldom accessed cold data separately in order to reduce database workload and increase the response times for queries.

The conventional approach of separating cold data from hot data is to add a front-end database to specifically store hot data and supply computing abilities for front-end applications. But, as the real-world data analysis tasks are unpredictable, it is probably that users are faced with the demand of cross-database mixed computing that involves the front-end database and the original database of different types. The DBMS, however, provides very limited abilities for implementing cross-database computations and generates poor performance. The support for computations between different types of databases can only be more unsatisfactory.

Another problem is that it is hard to achieve data routing using the front-end database. Data routing is the way of checking whether data is cold or hot according to specific rules in order to determine which data source it comes from or, to take a further step, retrieve data from different sources for performing a mixed computation. It is extremely difficult to implement the routing rules in database while keeping it transparent to the front-end application.

It is also infeasible to achieve the mixed computation and routing outside the database by hardcoding them in a high-level language, such as Java, because the coding is difficult, the workload is heavy and the whole process is cost-ineffective.

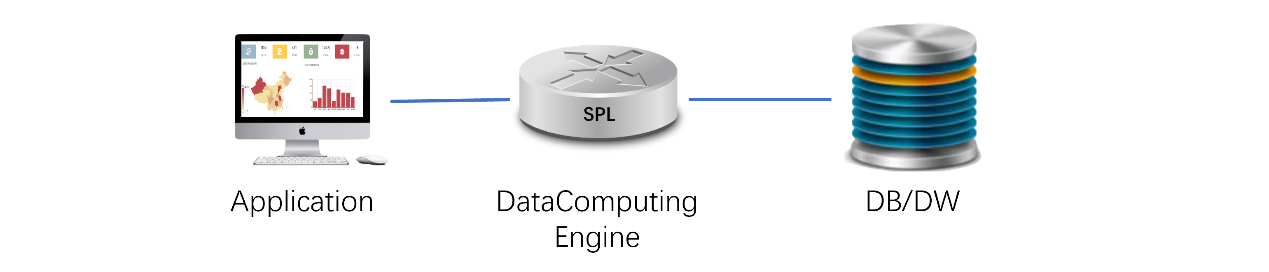

The most suitable tool for separating code data from hot data is the open-source esProc SPL. The approach is to insert esProc between the front-end application and the database to create a data computing engine, as shown below:

SPL’s computational capabilities are open. It can retrieve data from both local files and various databases of different types and then process it with the uniform syntax, making it easy to achieve mixed computing between cold and hot data.

SPL achieves the complex data routing rules using concise code. For a scenario where data is divided into cold or hot by different distances from a specified time point, SPL has the analytic function to split the SQL statement submitted by the front-end application, get the date parameter in the WHERE sub-statement, find which type of data, the recently hot data or the historical cold data or both, the query involves, and retrieve the desired data from involved data source(s) for computation.

SPL supports compressed columnar storage and encapsulates various high-performance algorithms, enabling convenient multithreaded processing, giving full play to the hardware’s computing ability, and thus pushing the performance up to, even above, the level of professional databases. SPL also can handle the small amount of most recently hot data in memory to achieve the highest response times. Moreover, SPL is a lightweight computing engine, which can be deployed on a single machine or even directly embedded into the front-end application when the size of hot data is relatively small, using much fewer system resources than databases.

SPL provides general JDBC driver and HTTP access method to be invoked by front-end applications. SPL supports common SQL syntax specifically for BI applications at the front-end to connect to it seamlessly without being remoulded, and can translate SQL statements to the dialect of the target database when it accesses the cold data.

Below is the sample SPL code of parsing a SQL statement and achieve data routing for getting cold and hot data (according to distances from the specified time point):

A |

B |

C |

|

1 |

=sql="select id,... from T where tdate=date('2020-07-18') and ..." |

||

2 |

=sql.sqlparse@w().split(" ") |

=A2.select@1(like(~,"tdate=date(‘????-??-??’)")) |

|

3 |

=int(mid(right(B2,14),3,4)) |

||

4 |

if A3>=2020 |

=connect() |

=replace(sql,"from T","from T.ctx") |

5 |

return B4.cursor@x(C4) |

||

6 |

else |

=connect("orcl") |

=sql.sqltranslate("ORACLE") |

7 |

return B6.cursor@x(C6) |

||

A1: The SQL statement submitted by the front-end application is passed in as a parameter.

A2-A3: Split the SQL statement to get the date parameter in WHERE clause and to compute the year.

A4-B5: If the year is after 2020 (which means hot data), use the cached hot data in the local to compute SQL and return result set.

A6-B7: If the year is before the year 2020 (which means cold data), connect to oracle database, translate the SQL statement to Oracle SQL, submit and execute it, and return result set.

Below is the sample SPL code of achieving mixed computation of cold and hot data:

A |

|

1 |

=cold=orcl.cursor("select customerid,sum(amt) totalamt from T where tdate>= to_date('2019-11-11','yyyy-MM-dd') and tdate<to_date('2020-01-01','yyyy-MM-dd') and … group by customerid") |

2 |

=hot=local.cursor("select customerid,sum(amt) totalamt from T where tdate>= date('2020-01-01') group by customerid") |

3 |

=[cold,hot].conjx() |

4 |

=A3.groups(customerid;sum(totalamt):totalamt) |

A1-A2: Retrieve the cold data and hot data grouped by customer id respectively from the database/data warehouse and the local.

A3-A4: Concatenate cursors of cold data and hot data, and group and summarize the data.

SPL Official Website 👉 https://www.scudata.com

SPL Feedback and Help 👉 https://www.reddit.com/r/esProcSPL

SPL Learning Material 👉 https://c.scudata.com

SPL Source Code and Package 👉 https://github.com/SPLWare/esProc

Discord 👉 https://discord.gg/2bkGwqTj

Youtube 👉 https://www.youtube.com/@esProc_SPL

Chinese version