SPL: Accessing ORC and Parquet

Both Parquet and ORC are columnar storage file formats of Hadoop, which offers Java API to read files of both formats. esProc encapsulates the Java code for implementing the file read to create easy-to-use SPL functions. These functions enable users to load Parquet/ORC files directly to esProc for computations. In this essay, we’ll take a close look at how you can use these functions to read and handle ORC/Paquet data.

Create/Close Hive connection

Similar to database connection, SPL also uses the “Create/Close” pair to connect to Hive. hive_open(hdfsUrl,thriftUrl,dbName,hdfsUserName) Parameter hdfsUrl is address of HDFS server, parameter thriftUrl is URI of Hive metadata, parameter dbName is name of a Hive database, and parameter hdfsUserName is HDFS username.

hive_close(conn) Parameter conn is the to-be-closed Hive connection.

Example: A1 creates a connection. The middle steps perform file read and write, and certain operations. A3 closes the connection created in A1.

| A | B | |

| 1 | =hive_open("hdfs://192.168.0.76:9000", "thrift://192.168.0.76:9083","default","hive") | |

| 2 | …… | |

| 3 | >hive_close(A1) |

Get database list

hive_db(conn) Get a list of databases in HIVE.

| A | B | |

| 1 | =hive_open("hdfs://192.168.0.76:9000", "thrift://192.168.0.76:9083","default","hive") | |

| 2 | =hive_db(A1) | |

| 3 | >hive_close(A1) |

The code returns the following data:

| dbName | |

| 1 | default |

| 2 | mydb |

Get a list of tables and corresponding file locations

hive_table(conn, [dbname]) Get a list of tables and locations of their corresponding HDFS files from the specified database, which is the default database when parameter dbname is absent. It lists all tables when no option works; @o option enables to list ORC tables, and @p option lists Parquet tables.

| A | B | |

| 1 | =hive_open("hdfs://192.168.0.76:9000", "thrift://192.168.0.76:9083","default","hive") | |

| 2 | =hive_table(A1) | /List all tables in the default database |

| 3 | =hive_table@o(A1) | /List ORC tables in the default tables |

| 4 | =hive_table@p(A1) | /List Parquet tables in the default tables |

| 5 | ||

| 6 | =hive_table(A1, "mydb") | /List all tables in mydb database |

| 7 | =hive_table@o(A1, "mydb") | /List ORC tables in mydb database |

| 8 | =hive_table@p(A1, "mydb") | /List Parquet tables in mydb database |

| 9 | >hive_close(A1) |

Below is the result A3 returns:

| tableName | location | |

| 1 | tstage | hdfs://master:9000/user/hive/warehouse/tstage |

| 2 | inbound_orc | hdfs://master:9000/user/hive/warehouse/inbound_orc |

| 3 | store_orc | hdfs://master:9000/user/hive/warehouse/store_orc |

| 4 | orc_read | hdfs://master:9000/user/hive/warehouse/orc_read |

| 5 | store_orc2 | hdfs://master:9000/user/hive/warehouse/store_orc2 |

The result set lists ORC tables and locations of corresponding HDFS files in the default database.

Data retrieval

f.hdfs_import@pc([col,…]) function is used to retrieves ORC data and Parquet data. Parameter f is an ORC/Parquet file, a local file or an HDFS file. Parameter col specifies fields to be retrieved; all fields will be retrieved by default. @p option enables retrieved Parquet data; by default, the function reads ORC data. @c option enables cursor-style data retrieval; by default, the function retrieves data in the ordinary way.

1. Retrieving local ORC data

Use file(fileName) to load a local ORC file, and f.hdfs_import() to retrieve data from it.

| A | B | |

| 1 | =file("H:/tmp/data/store.orc") | /Load a local ORC file |

| 2 | =A1.hdfs_import() | /Retrieve all fields |

| 3 | =A1.hdfs_import("total","product") | /Retrieve total field and product field |

| 4 | ||

| 5 | =A1.hdfs_import@c() | /Use cursor to retrieve data |

| 6 | =A5.skip(100) | /Retrieve data by skipping the first 100 records |

| 7 | =A5.fetch(100) | /Fetch 100 records |

Below is the result A2 returns:

| Index | store | product | total |

| 1 | store_id_000001 | 1 | 211 |

| 2 | store_id_000001 | 3 | 1253 |

| 3 | store_id_000001 | 4 | 458 |

| 4 | store_id_000001 | 5 | 945 |

| … | … | … | … |

For a big ORC table in Hive, divide it if its size reaches the maximum HDFS block size. Each segment file has a fixed number of records. Use skip(N) to skip N records, or blocks that do not contain any of the target records. This approach is very suited to handle data display by page and other similar scenarios.

2. Retrieving HDFS ORC data

Use file(fileName) to load an HDFS ORC file, and f.hdfs_import() to retrieve data from it.

| A | B | |

| 1 | =file("hdfs://localhost:9000/user/86186/store.orc") | /Load an HDFS file |

| 2 | =A1.hdfs_import() | /Retrieve all fields |

| 3 | =A1.hdfs_import("total","product") | /Retrieve total field and product field |

| 4 | ||

| 5 | =A1.hdfs_import@c() | /Use cursor to retrieve data |

| 6 | =A5.skip(100) | /Retrieve data by skipping the first 100 records |

| 7 | =A5.fetch(100) | /Fetch 100 records |

Use the above-mentioned hive_table()/hive shell method to obtain location of the HDFS file an Hive table corresponds to. And use f.hdfs_import() function to load and retrieve data.

3. Retrieving HDFS data via the Hive table

Work with hive_table() to parse HDFS file addresses from Hive table names, and retrieve data from HDFS files.

| A | B | |

| 1 | =hive_open("hdfs://192.168.0.76:9000", "thrift://192.168.0.76:9083","default","hive") | /Connect to Hive |

| 2 | =hive_table@o(A1) | /Get ORC tables and corresponding files |

| 3 | >hive_close(A1) | /Close the Hive connection |

| 4 | =A2.select(tableName=="nested_struct_t") | /Search for table nested_struct_t |

| 5 | =file(A4.location) | /Load the corresponding HDFS file |

| 6 | =A5.hdfs_import() | /Retrieve data from the HDFS file |

| 7 |

Below is the result A6 returns:

A4 searches for the table’s record from table sequence A2 through the Hive table name, gets the corresponding HDFS file, and retrieve data from it.

4. Using compound data structure

Both ORC format and Parquet format support compound data structures, such as Map, Nested and Array, so as to conveniently process structured data. Below we use my_orc_nested table and show code examples:

-- Creating table

CREATE TABLE my_orc_nested (

id INT,

name STRING,

address STRUCT<street:STRING, city:STRING, state:STRING>,

phones MAP<STRING, STRING>,

location ARRAY<STRING>

)STORED AS ORC;

– Inserting data

INSERT INTO TABLE my_orc_nested VALUES

(1, 'John', named_struct('street', '123 Main St', 'city', 'New York', 'state', 'NY'), map('home', '123-456-7890', 'work', '987-654-3210'), array('Charlie', 'David', 'Eve')),

(2, 'Jane', named_struct('street', '456 Elm St', 'city', 'San Francisco', 'state', 'CA'), map('home', '111-222-3333'), array('Beijing', 'Herbin')),

(3, 'master', named_struct('street','John Doe', 'city','Chongqing', 'state','BJ'), map('phone','2233445566'),array('Chongqing', 'Chengdu'));

When sql insert script is used in Hive shell to add new data, we need to use the corresponding function to handle the compound data structure. named_struct(), map() and array() functions respectively handle nested structure, Map structure and array structure.

| A | B | |

| 1 | =file("hdfs://localhost:9000/user/86186/hive/my_orc_nested") | |

| 2 | =A1.hdfs_import ("name", "phones", "address") | /Retrieve three fields: name, phones and address |

| 3 | =A1.hdfs_import () | |

| 4 | =A3.(address).conj() | /Concatenate address data |

| 5 | =A3.(phones).(~.fname().count()) | /Count the number of phones |

| 6 | =A3.(location).(~.count()) | / Count the number of locations |

| 7 |

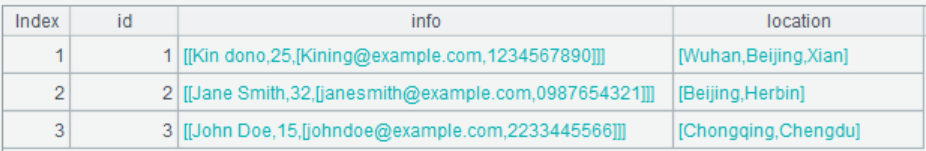

Below is the result A2 returns:

Filter fields and change the order of displayed fields.

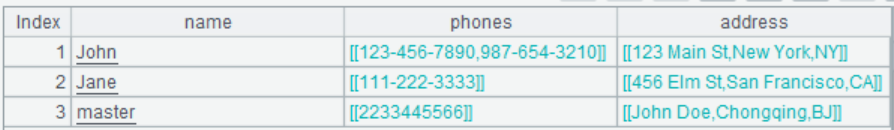

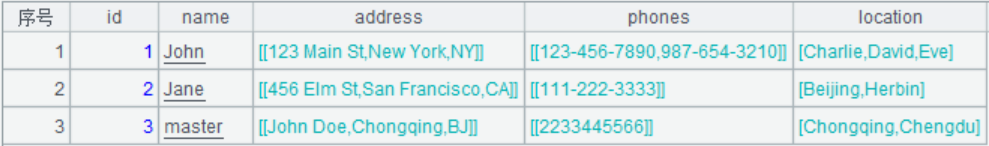

Below is the result A3 returns:

In the returned result, the nested structure returns a table sequence, Map structure returns a single-record table sequence, and Array structure returns a sequence.

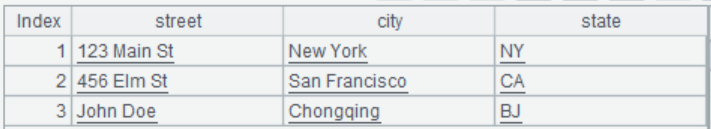

Below is the result A4 returns:

Concatenate the address data of nested structure for the convenience of subsequent computations.

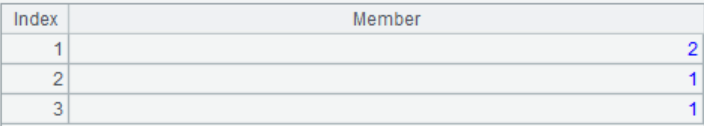

Below is the result A5 returns:

Count the number of phones records of Map structure according to key values.

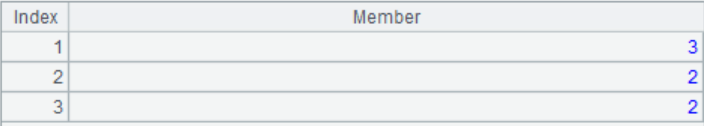

Below is the result A6 returns:

Count the number of location records of array structure.

Retrieving records of compound data structures is similar to Parquet and ORC data retrieval. And use a proper method to perform the data retrieval according to the characteristics of storage structure as needed. SPL’s conversion of the nested structure to a flat structure particularly makes computations convenient.

5. Retrieving Parquet data

Similar to ORC data retrieval, we also use f.hdfs_import@pc([col1,…]) to retrieve Parquet data in the same way. And in a cursor, skip()function works the same way (Just skip details here). As the basic ways of handling compound data structures via table sequences have been explained, we move on to explain how to convert a nested structure to a flat structure and how to retrieve values from an array.

Below are scripts of creating table and adding data using Hive shell:

CREATE TABLE nested_struct_t (

id INT,

info struct<name:STRING, age:INT, contact:struct<email:STRING, phone:STRING>>,

location ARRAY<STRING>

)

STORED AS PARQUET;

INSERT INTO TABLE nested_struct_t VALUES

(1, named_struct('name','Kin dono', 'age',25, 'contact',named_struct('email', 'Kining@example.com', 'phone','1234567890')), array('Wuhan', 'Beijing', 'Xian')),

(2, named_struct('name','Jane Smith', 'age',32, 'contact',named_struct('email','janesmith@example.com', 'phone','0987654321')), array('Beijing', 'Herbin')),

(3, named_struct('name','John Doe', 'age',15, 'contact', named_struct('email','johndoe@example.com','phone','2233445566')),array('Chongqing', 'Chengdu'));

| A | B | |

| 1 | =file("hdfs://localhost:9000/user/86186/nested_struct_test") | |

| 2 | =A1.hdfs_import@p() | /Retrieve the whole table |

| 3 | =A1.hdfs_import@p("id", "location", "location[0]", "location[2]") | /Perform filtering on the array field |

| 4 | =A1.hdfs_import@p("info", "info.name", "info.contact", "info.contact.email") | /Perform filtering on the nested field |

| 5 |

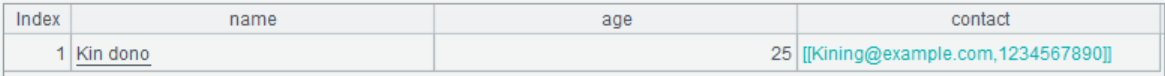

Below is the result A2 returns:

Click the first record under info field and we have this:

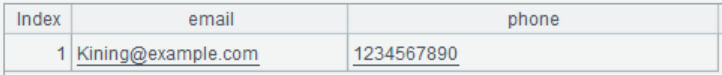

Click the first record under contact field and we have this:

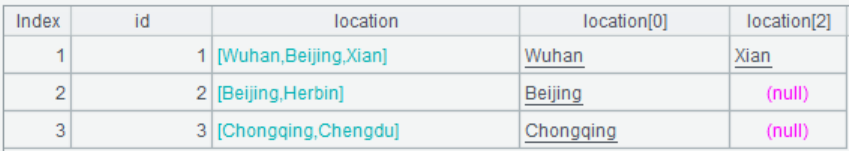

Below is the result A3 returns:

We can specify an index number to query values in an array. An out-of-boundary value is displayed as null.

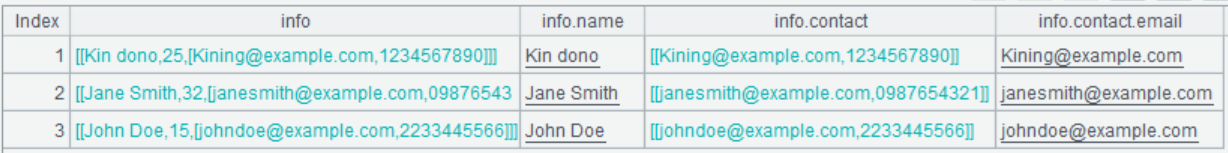

Below is the result A4 returns:

When querying nested structure data, we convert it to flat structured data in rows and columns.

SPL functions encapsulate Parquet and ORC file retrieval and create a consistent way for retrieving local files and HDFS files, greatly simplifying user operations and increasing efficiency.

SPL Official Website 👉 https://www.scudata.com

SPL Feedback and Help 👉 https://www.reddit.com/r/esProcSPL

SPL Learning Material 👉 https://c.scudata.com

SPL Source Code and Package 👉 https://github.com/SPLWare/esProc

Discord 👉 https://discord.gg/2bkGwqTj

Youtube 👉 https://www.youtube.com/@esProc_SPL

Chinese version