Remove Duplicate Rows

【Question】

I have a CSV file to which both duplicate and unique rows are added every day, so it accumulates many duplicates. I need to remove the duplicates based on specific columns. For example:

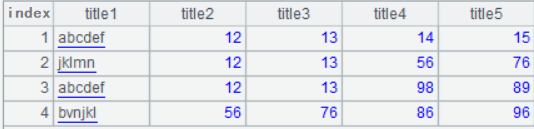

csvfile1:

    title1 title2 title3 title4 title5
    abcdef 12 13 14 15
    jklmn 12 13 56 76
    abcdef 12 13 98 89
    bvnjkl 56 76 86 96

Now, based on title1, title2 and title3, I have to remove the duplicates and write the unique entries to a new CSV file. As you can see, the abcdef row is not unique: it repeats on title1, title2 and title3, so every occurrence of it should be removed.

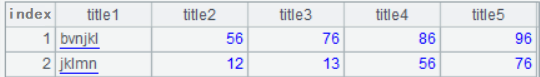

Expected output:

    title1 title2 title3 title4 title5
    jklmn 12 13 56 76
    bvnjkl 56 76 86 96

Here is the code I tried. Script that writes the input CSV:

    import csv

    # This list changes daily, so new and duplicate rows get appended daily
    a = [["a", "b", "c"], ["g", "h", "i"], ["a", "b", "c"]]

    # newline="" lets the csv module control the line endings
    with open("1.csv", "a+", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(("t1", "t2", "t3"))
        for row in a:  # write every row, including the duplicate
            writer.writerow(row)

Duplicate removal script:

    import csv

    with open('1.csv', 'r') as in_file, open('2.csv', 'w') as out_file:
        seen = set()  # set for fast O(1) amortized lookup
        for line in in_file:
            if line in seen:
                continue  # skip duplicate
            seen.add(line)  # remember this line so later copies are skipped
            out_file.write(line)

My Output (2.csv):

t1 t2 t3

a b c

g h i

Now, I do not want a b c in 2.csv at all, because it repeats on t1 and t2. I want only g h i, which is unique on t1 and t2.

Someone else offered the following solution, but I don’t understand it:

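    import csv

    with open('1.csv', 'r') as in_file, open('2.csv', 'w') as out_file:
        seen = set()       # keys encountered at least once
        seentwice = set()  # keys encountered more than once
        reader = csv.reader(in_file)
        writer = csv.writer(out_file)
        rows = []
        # First pass: record every key and note which ones repeat
        for row in reader:
            if (row[0], row[1]) in seen:
                seentwice.add((row[0], row[1]))
            seen.add((row[0], row[1]))
            rows.append(row)
        # Second pass: write only the rows whose key never repeated
        for row in rows:
            if (row[0], row[1]) not in seentwice:
                writer.writerow(row)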

【Answer】

You just need to group the rows by the first three columns, keep the groups that contain only one member, and then concatenate those groups. This kind of structured computation is simple to write and easy to follow in SPL (Structured Process Language):

|   | A |
|---|---|
| 1 | =file("d:\\source.csv").import@t() |
| 2 | =A1.group(title1,title2,title3).select(~.len()==1).conj() |
| 3 | =file("d:\\result.csv").export@c(A2) |

A1: Read in the content of source.csv.

A2: Group records by the first 3 fields, find single-member groups and concatenate records in the selected groups.

A3: Write A2’s result set to the target file result.csv.
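
For readers who would rather stay in Python, as in the question, the same group-and-keep-singletons idea can be sketched with the standard library alone. This is only an illustration under stated assumptions: the helper names `keep_unique_by_key` and `filter_csv` are made up for this example, the key is given as zero-based column positions, and the input file is assumed to be comma-separated with a header row on its first line.

```python
import csv
from collections import Counter


def keep_unique_by_key(rows, key_cols):
    """Return the rows whose key (the values at key_cols) occurs exactly once.

    Hypothetical helper for illustration; key_cols holds zero-based indexes.
    """
    rows = list(rows)
    # First pass: count how many rows share each key (the "group" step).
    counts = Counter(tuple(row[i] for i in key_cols) for row in rows)
    # Second pass: keep rows from single-member groups, in input order.
    return [row for row in rows if counts[tuple(row[i] for i in key_cols)] == 1]


def filter_csv(src_path, dst_path, key_cols):
    """Copy src_path to dst_path, dropping every row whose key repeats.

    Assumes the first line of the source file is a header row.
    """
    with open(src_path, newline="") as src, open(dst_path, "w", newline="") as dst:
        reader = csv.reader(src)
        writer = csv.writer(dst)
        header = next(reader, None)  # None if the file is empty
        if header is not None:
            writer.writerow(header)
        writer.writerows(keep_unique_by_key(reader, key_cols))


if __name__ == "__main__":
    # Sample rows from the question, without the header line.
    sample = [
        ["abcdef", "12", "13", "14", "15"],
        ["jklmn", "12", "13", "56", "76"],
        ["abcdef", "12", "13", "98", "89"],
        ["bvnjkl", "56", "76", "86", "96"],
    ]
    for row in keep_unique_by_key(sample, key_cols=(0, 1, 2)):
        print(row)
```

Run on the sample rows, this prints only the jklmn and bvnjkl rows, because both abcdef rows share the same first three values. Calling `filter_csv("1.csv", "2.csv", (0, 1))` would apply the same rule to the asker's files, keyed on t1 and t2. Counting first and filtering second is what separates this from a plain "keep the first occurrence" de-duplication.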
