SPL: Access HDFS

Hadoop Distributed File System (HDFS) provides the Java API to read and write its files, and esProc encapsulates the JAVA methods for reading and writing HDFS files to easier-to-use SPL functions. With these functions, HDFS file can be loaded directly into esProc for calculation. This article describes in detail the usage of the functions.

Connect to HDFS by way of Java API

Create/close HDFS connections

SPL connects to HDFS with paired "create/close" in a similar way to database.

Create HDFS connections

hdfs_open(url, user), url is the address of the HDFS server, user specifies the operation user, and the default is "root".

Example:

A |

|

1 |

=hdfs_open("hdfs://192.168.0.8:9000", "root") |

Close HDFS connections

hdfs_close(hdfsConn), hdfsConn is the HDFS connection to be closed.

Example: A1 creates a connection, the middle steps perform some operations of reading, writing, and calculation, and then A3 closes the connection created by A1

A |

|

1 |

=hdfs_open("hdfs://192.168.0.8:9000", "root") |

2 |

…… |

3 |

=hdfs_close(A1) |

Operations on directories and files

Obtain file list/create directories/delete directories

hdfs_dir (hdfsConn, parent), hdfsConn is the HDFS connection, parent is the parent directory to be operated.

Example: Different options indicate different operations. A2 uses the @d option to get a list of directories under /user/root; A3 uses the @p option to get a full path list of directories and files under /user/root; A4 uses the @m option to create a subdirectory imageFolder under /user/root; A5 uses the @r option to delete the subdirectory imageFolder:

A |

|

1 |

=hdfs_open("hdfs://192.168.0.8:9000","root") |

2 |

=hdfs_dir@d(A1,"/user/root") |

3 |

=hdfs_dir@p(A1,"/user/root") |

4 |

=hdfs_dir@m(A1,"imageFolder") |

5 |

=hdfs_dir@r(A1,"imageFolder") |

6 |

=hdfs_close(A1) |

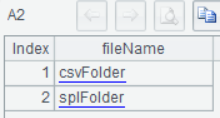

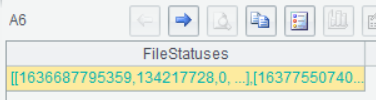

A2 lists two directories:

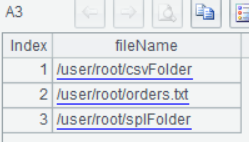

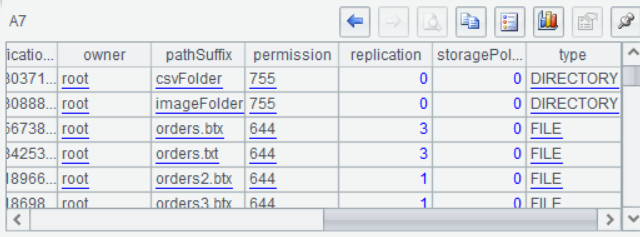

In addition to two directories, A3 also lists an orders.txt file:

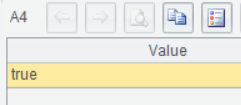

A4 and A5 return the true value after successful execution:

Upload to HDFS

hdfs_upload(hdfsConn, localFile, remoteFile), hdfsConn is the HDFS connection, localFile is the local file, and remoteFile is the target file to be uploaded to HDFS.

Example: When adding @d option in A3, localFile and remoteFile are both folders and will upload all the files in the folders.

A |

|

1 |

=hdfs_open("hdfs://192.168.0.8:9000", "root") |

2 |

=hdfs_upload(A1,"D:/employees.xlsx","/user/root/splFolder/employees.xlsx") |

3 |

=hdfs_upload@d(A1,"D:/txtFolder/","/user/root/txtFolder/") |

4 |

=hdfs_close(A1) |

Download HDFS files

hdfs_download(hdfsConn, remoteFile, localFile), hdfsConn is the HDFS connection, remoteFile is the file to be downloaded in HDFS, and localFile is the target file downloaded to local.

Example: Similar to uploading, the @d option is to download the whole folders.

A |

|

1 |

=hdfs_open("hdfs://192.168.0.8:9000", "root") |

2 |

=hdfs_download(A1,"/user/root/splFolder/employees.xlsx","D:/employees.xlsx") |

3 |

=hdfs_download@d(A1,"/user/root/txtFolder/","D:/txtFolder/") |

4 |

=hdfs_close(A1) |

Determine the existence of a file

hdfs_exists(hdfsConn, hdfsFile), hdfsConn is the HDFS connection, and hdfsFile is the HDFS file or folder to be determined.

Example:

A |

|

1 |

=hdfs_open("hdfs://192.168.0.8:9000", "root") |

2 |

=hdfs_exists(A1,"/user/") |

3 |

=hdfs_exists(A1,"/user/root/city.xlsx") |

4 |

=hdfs_close(A1) |

Read and write file data

Uploading and downloading HDFS files is the process of interacting with local files, but there are situations where data need to be read directly into memory for computation, or the data continuously generated in memory need to be saved directly to HDFS without relaying through local files (the probable reason is the files are too large to store locally or the relaying is too slow). SPL provides hdfs_file function which supports to read a small section of the file using cursor. It can read the file as it calculates, or calculate while appending the result data to the HDFS file.

Except for the common data file formats like text (txt/csv/json/xml) and Excel, SPL also provides high-performance bin files in binary format (btx).

Open HDFS files

hdfs_file (hdfsConn, hdfsFile:cs), hdfsConn is the HDFS connection, and hdfsFile is the file. If the file is in text format, use cs to specify the text charset (UTF-8, GBK, etc.).

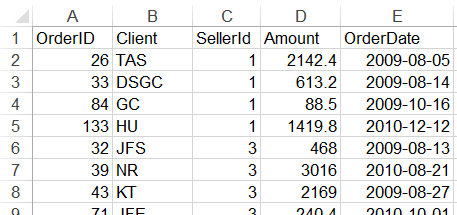

The data of the orders table are stored in three file formats: orders.txt, orders.xlsx, and orders.btx, and the following script performs reading and writing them.

A |

|

1 |

=hdfs_open("hdfs://192.168.0.8:9000", "root") |

2 |

=hdfs_file(A1,"/user/root/orders.txt":"UTF-8") |

3 |

=A2.read() |

4 |

=A2.import@t() |

5 |

=A2.cursor@t().fetch(2) |

6 |

=A2.export@t(T) |

7 |

=hdfs_file(A1,"/user/root/orders.xlsx") |

8 |

=A7.xlsimport@t() |

9 |

=A7.xlsexport@t(T) |

10 |

=hdfs_file(A1,"/user/root/orders.btx") |

11 |

=A10.import@b() |

12 |

=A10.cursor@b().skip(10).fetch(5) |

13 |

=A10.write@b(T) |

14 |

=hdfs_close(A1) |

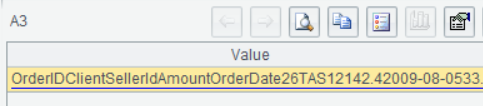

A2 opens the HDSF file orders.txt;

A3 reads the overall orders.txt as one big string:

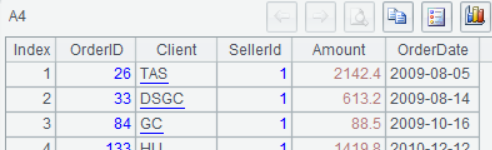

A4 loads orders.txt as a table sequence (the first row is the field name and the data in the row are split by TAB).

A5 loads the first two rows of orders.txt with a cursor, which is suitable for handling very large amounts of data because it can calculate as it loads data.

A6 writes the in-memory table sequence T to orders.txt.

A7 opens the Excel file orders.xlsx, A8 reads its data into a table sequence, and A9 writes the table sequence T back to orders.xlsx.

A10 opens the bin file orders.btx, A11 reads in the data, and A12 reads in with a cursor, skipping the first 10 records and reading the 11th to 15th records. A13 writes the table sequence T back to orders.btx.

Connect to HDFS by way of WEBHDFS

The above functions are all implemented through the JAVA APIs of HDFS, which requires importing the corresponding version of Hadoop jars and configuration files in the SPL environment. To avoid the trouble of deploying, Hadoop provides another HTTP REST API method of WEBHDFS. SPL also offers two functions based on this method: using webhdfs_file()function to read and write file; using webhdfs() function to perform all the remaining operations (such as uploading and downloading arbitrary files, getting HDFS directory and file list, changing access rights of files, getting storage scheme of HDFS files, etc.).

Read and write file data

webhdfs_file(fileUrl, params), fileUrl is url of the target file, and params is the parameters in the url.

The following takes the different data file types of orders for example:

A |

|

1 |

|

2 |

=webhdfs_file("http://localhost:50070/webhdfs/v1/user/root/orders.txt":"UTF-8","user.name=root") |

3 |

=A2.read() |

4 |

=A2.import@t() |

5 |

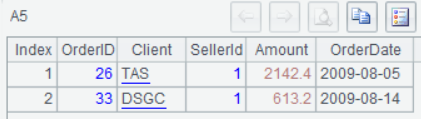

=A2.cursor@t().fetch(2) |

6 |

=A2.export@t(T) |

7 |

=webhdfs_file("http://localhost:50070/webhdfs/v1/user/root/orders.xlsx","user.name=root") |

8 |

=A7.xlsimport@t() |

9 |

=A7.xlsexport@t(T) |

10 |

=webhdfs_file("http://localhost:50070/webhdfs/v1/user/root/orders.btx","user.name=root") |

11 |

=A10.import@b() |

12 |

=A10.cursor@b().skip(10).fetch(5) |

13 |

=A10.write@b(T) |

14 |

Due to the use of http, webhdfs does not need a persistent connection which also dispenses with the open/close action in A1 and A14.

Operations on various data files are the same as the previous hdfs_file, such as overall reading, overall writing, cursor reading, and append writing. However, the bin file needs random-writing when appending data, which is not supported in Java APIs of HDFS, so it also does not support the appending write of the bin file.

Both of the functions can read file data, but each has its own advantages and disadvantages: hdfs_file()function needs to deploy a specific version of the Hadoop environment and is difficult to integrate, but has better performance; webhdfs_file() function is easy to use and compatible with different versions of HDFS, but its performance is slightly slower.

Universal functions

webhdfs(url, localFile), url is any operation in "HDFS WEBHDFS"; if the operation is to upload a file, then localFile is the local file to be uploaded; if the operation is to download a file, then localFile is the target file downloaded to local; for other operations, the localFile parameter is not needed and can be omitted.

Example:

A |

|

1 |

=webhdfs("http://localhost:50070/webhdfs/v1/user/root/f1.zip ?op=CREATE&user.name=root","d:/f1.zip") |

2 |

=webhdfs("http://localhost:50070/webhdfs/v1/user/root/f2.png ?op=CREATE&user.name=root&overwrite=true","d:/f2.png") |

3 |

=webhdfs("http://localhost:50070/webhdfs/v1/user/root/f3.txt ?op=APPEND&user.name=root","d:/f3_part5.txt") |

4 |

=webhdfs("http://localhost:50070/webhdfs/v1/user/root/f4.xlsx ?op=OPEN&user.name=root","d:/f4.xlsx") |

5 |

=webhdfs("http://localhost:50070/webhdfs/v1/user/root/?op=LISTSTATUS&user.name=root") |

6 |

=json(A5) |

7 |

=A6.FileStatuses.FileStatus |

8 |

=webhdfs("http://localhost:50070/webhdfs/v1/user/root/orders.txt ?op=SETOWNER&owner=user1&group=grp2") |

A1 uploads f1.zip.

A2 uploads f2.png with overwrite=true in the parameters, and the file will be replaced by force if it already exists.

A3 uploads f3_part5.txt and appends it to f3.txt.

A4 downloads f4.xlsx. This universal function can upload and download any file, including data files such as txt and xlsx;

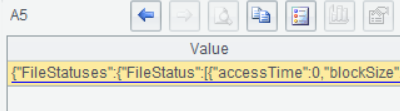

A5 gets a list of files in the /user/root/ directory. All the operations of WEBHDFS return either nothing or a result in JSON format .

A6 converts the JSON string returned by A5 into a nested multi-layer table sequence.

A7 takes the required layer of the table sequence from A6 and can view the details of the file list.

A8 sets the owner of the HDFS file. And other more operations will not be demonstrated in detail.

SPL Official Website 👉 http://www.scudata.com

SPL Feedback and Help 👉 https://www.reddit.com/r/esProc

SPL Learning Material 👉 http://c.scudata.com

SPL Source Code and Package 👉 https://github.com/SPLWare/esProc

Discord 👉 https://discord.gg/ydhVnFH9

Youtube 👉 https://www.youtube.com/@esProc_SPL

Chinese version