Here comes big data technology that rivals clusters on a single machine

Distributed data warehouses (such as MPP) in the era of big data are a popular technology, even to the point where data warehouses are referred to as distributed.

But is distributed data warehousing really necessary? After all, these distributed data warehouse products are not cheap, with high procurement and maintenance costs. Is there a low-cost and lightweight solution?

In fact, the amount of data involved in structured data computing tasks (the main goal of data warehouses) is usually not very large. For example, a bank with tens of millions of accounts has a transaction volume of hundreds of millions of transactions per year, roughly ranging from a few gigabytes to several tens of gigabytes; The data that an e-commerce system with millions of accounts can accumulate is still of this scale. Even for a few top companies with a huge amount of data, there are still a large number of tasks involving only a small amount of data. The cases that a single computing task involves hundreds of gigabytes of data are rare, and it is difficult to accumulate to the PB level claimed by many big data solution vendors.

It can also be seen from another aspect that the number of nodes in most distributed data warehouses is not very large, often around ten or less. The computing centers of top enterprises may have thousands or even tens of thousands of nodes, but a single task only uses a few to a dozen of these nodes. For example, the medium standard warehouse with a large sales volume in SnowFlake only has 4 nodes. This is the mainstream scale of distributed data warehouses. A task with a PB level data volume, where one node processes 1T (usually several hours), also requires 1000 nodes, which is obviously not the norm.

As claimed, a single database can easily handle data on a scale of tens of gigabytes, but in reality, it cannot. Batch jobs for several hours and querying for a few minutes at a time is also a common practice. As a result, users will start thinking about distributed computing.

Why is this?

There are two reasons for this. On the one hand, although the data volume of these computing tasks is not large, they have considerable complexity and often involve multiple associations. On the other hand, the SQL syntax used in the database cannot conveniently describe these complex operations, and the reluctantly written code cannot be optimized by the database, resulting in excessive computational complexity. In other words, SQL databases cannot fully utilize hardware resources and can only hope for distributed expansion.

esProc SPL can.

esProc SPL is an open-source lightweight computing engine, here: https://github.com/SPLWare/esProc. As a pure Java developed program, it can be seamlessly embedded into Java applications, providing a high-performance computing experience without the need for a database.

esProc SPL often outperforms MPP in terms of performance, with a single machine matching a cluster.

The star clustering task of the National Astronomical Observatory has a data scale of only about 50 million rows. It takes 3.8 hours for a distributed database to run 5 million rows using 100 CPUs, and it is estimated to take 15 days (square complexity) to complete 50 million rows. The esProc SPL runs a full capacity of 50 million data on a 16 CPU single machine in less than 3 hours.

The batch job of loan business in a certain bank, ran for 4300 seconds with a HIVE cluster of 10 nodes and 1300 rows of SQL ; esProc SPL ran for 1700 seconds on a single machine with 34 lines of code.

A certain bank’s anti money laundering preparation, Vertica ran for 1.5 hours on 11 nodes, and esProc SPL single machine ran for 26 seconds, unexpectedly turning the batch job into a query!

A certain e-commerce funnel analysis, SnowFlake’s medium standard cluster (4-node) cannot get a result after 3 minutes and user gave up. esProc SPL completed in 10 seconds on a single machine.

A certain spatiotemporal collision task, with a ClickHouse cluster of 5 nodes for 1800 seconds, was optimized by esProc SPL to a single machine for 350 seconds.

…….

These cases can further illustrate that there are not many cluster nodes for a large number of actual tasks, and almost all such scenarios can be solved by esProc using a single machine.

How does esProc SPL achieve this?

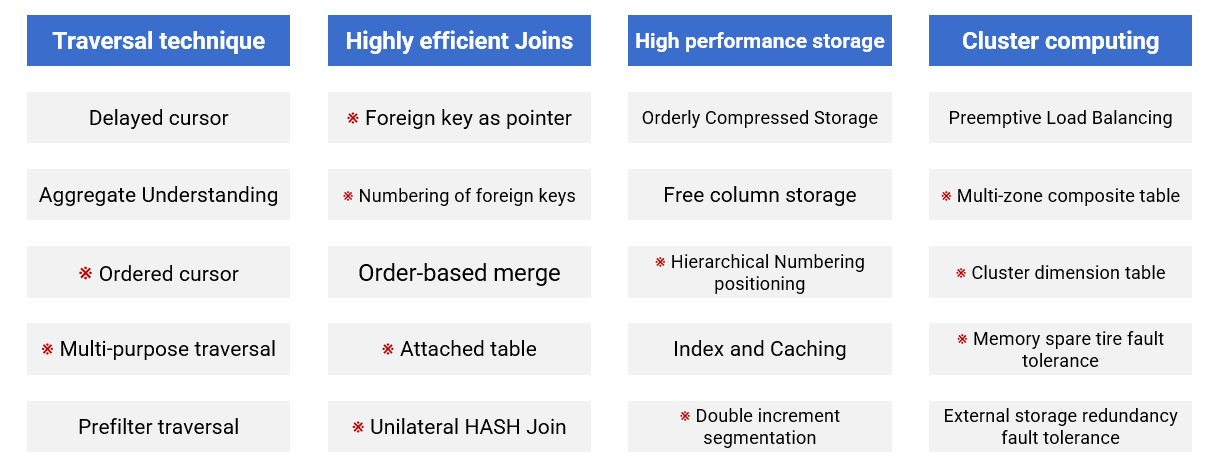

In terms of engineering, esProc also adopts commonly used MPP acceleration techniques such as compression, columnar storage, indexing, and vector computing; More importantly, esProc is no longer based on SQL, but instead uses its own programming language SPL, which includes many high-performance storage mechanisms and algorithm libraries that cannot be implemented based on SQL theory:

With these foundations, it is easy to write code with lower computational complexity, effectively avoiding the problem of excessive computation of SQL code, fully utilizing hardware resources, and achieving that a single machine matches a cluster.

Regarding the performance advantages of esProc, How the performance improvement by orders of magnitude happened has a popular explanation, SPL: a database language featuring easy writing and fast running explains in depth why SQL cannot write high-performance code.

The above figure lists some high-performance technologies of SPL, and it can be seen that esProc also supports cluster computing. However, due to the high performance of esProc, in practical tasks, only a single machine is used to achieve the ability of the original cluster. As a result, except for some simple cluster scenarios for high concurrency and hot standby, esProc’s cluster computing capabilities have not had the opportunity to be deeply honed, and to some extent, it can be said that they are not mature enough.

For a specific example, the spatiotemporal collision problem mentioned earlier has a total data volume of about 25 billion rows, and SQL does not seem very complex:

WITH DT AS ( SELECT DISTINCT id, ROUND(tm/900)+1 as tn, loc FROM T WHERE tm<3*86400)

SELECT * FROM (

SELECT B.id id, COUNT( DISINCT B.tn ) cnt

FROM DT AS A JOIN DT AS B ON A.loc=B.loc AND A.tn=B.tn

WHERE A.id=a AND B.id<>a

GROUP BY id )

ORDER BY cnt DESC

LIMIT 20

Traditional databases run too slowly, and users turned to ClickHouse for help. However, even in a 5-node cluster environment, they ran for more than 30 minutes and did not meet their expectations. With the same amount of data, the SPL code can complete calculations in less than 6 minutes with just one node, exceeding user expectations. Considering the gap in hardware resources, SPL is equivalent to more than 25 times faster than ClickHouse.

| A | |

|---|---|

| 1 | =now() |

| 2 | >NL=100000,NT=3*96 |

| 3 | =file("T.ctx").open() |

| 4 | =A3.cursor(tm,loc;id==a).fetch().align(NL*NT,(loc-1)*NT+tm\900+1) |

| 5 | =A3.cursor@mv(;id!=a && A4((loc-1)*NT+tm\900+1)) |

| 6 | =A5.group@s(id;icount@o(tm\900):cnt).total(top(-20;cnt)) |

| 7 | =interval@ms(A1,now()) |

(The SPL code is written in a grid, which is very different from ordinary programming languages. Please refer to here: A programming language coding in a grid.)

The DISTINCT calculation in SQL involves HASH and comparison, and when the data amount is large, the calculation amount will also be large. Furthermore, there will be self-join and further COUNT(DISTINCT), which will seriously slow down performance. SPL can fully utilize the ordered grouping and sequence number positioning that SQL does not have, effectively avoiding the high complexity of self-join and DISTINCT operations. Although there is no advantage in storage efficiency compared to ClickHouse, and Java may be slightly slower than C++, it still achieves an order of magnitude of performance improvement.

Running at a speed of 300 kilometers per hour does not necessarily require high-speed rail (distributed MPP), so can a family sedan (esProc SPL).

SPL Official Website 👉 http://www.scudata.com

SPL Feedback and Help 👉 https://www.reddit.com/r/esProc

SPL Learning Material 👉 http://c.scudata.com

SPL Source Code and Package 👉 https://github.com/SPLWare/esProc

Discord 👉 https://discord.gg/ydhVnFH9

Youtube 👉 https://www.youtube.com/@esProc_SPL

Chinese version