Multidimensional Analysis Backend Practice 6: big fact table associate with small dimension table

Abstract

This series of articles explain how to perform multidimensional analysis (OLAP) in steps with detailed samples. Click to learn more in Multidimensional Analysis Backend Practice 6: big fact table associate with small dimension table. Multidimensional Analysis Backend Practice 6: big fact table associate with small dimension table

Aim of practice

This issue aims to associate all the new customer data in the customer wide table with multiple dimension tables such as the branch department table, and perform slice and grouping calculation with dimension table fields on the basis of last issue.

The steps of practice are:

1. Pre-process the customer table and perform the sequence numberization of the dimensions.

2. Pre-calculate the dimension tables and perform the association of each dimension.

3. Associate the customer data in wide table and the dimension tables, then perform slice and grouping: implemented by calling SPL with Java code.

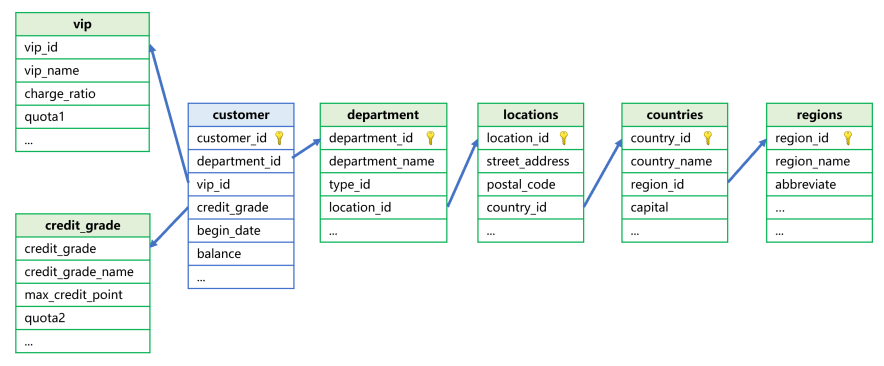

The associations between the customer table and each dimension table are shown in the picture:

The dimension tables include:

1. The branch department table “department”

2. The location table “location”

3. The country table “countries”

4. The region table “regions”

5. The VIP table “vip”

6. The credit grading table “credit_grade”

The dimension table fields are the same as those in last issue.

The purpose of multidimensional analysis calculation can be described in the following SQL statement of Oracle:

select v.interest_rate1,ct.country_id,c.job_id,sum(c.balance) sum,count(c.customer_id) count

from customer c

left join department d on c.department_id=d.department_id

left join locations l on d.location_id=l.location_id

left join countries ct on l.country_id=ct.country_id

left join regions r on r.region_id=ct.region_id

left join vip v on c.vip_id=v.vip_id

left join credit_grade cg on c.credit_grade=cg.credit_grade

where d.applause_rate in (26,30,33,65,67,74,75,77)

and r.region_name in ('Asia','Europe','Americas')

and v.charge_ratio in (9,11)

and cg.quota2 in(50000,80000)

and c.job_id in ('AD_VP','FI_MGR','AC_MGR','SA_MAN','SA_REP')

and c.flag1='1' and c.flag8='1'

group by v.interest_rate1,ct.country_id,c.job_id

Sequence numberization of dimension

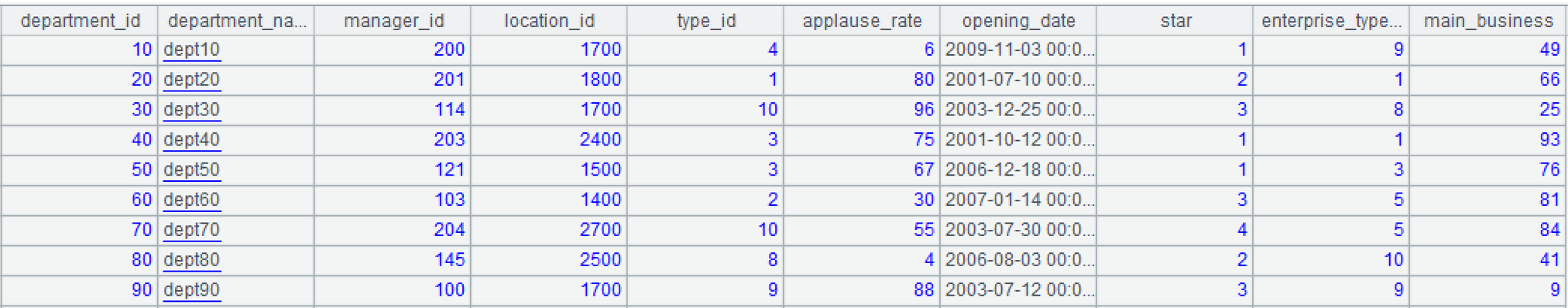

The data of branch department table are as follows:

What stores in the department_id field of the customer table is a manual code (as shown in the following picture) which needs to be converted to sequence numbers in the branch department table.

We can append conversion code in etl.dfx.

A |

|

1 |

=file("data/customer.ctx").open().cursor() |

2 |

=file("data/department.btx").import@b().keys(department_id).derive(#:num) |

3 |

=A1.switch(department_id,A2:department_id) |

4 |

=A3.new(department_id.num:department_id,job_num,employee_id,begin_date,customer_id,first_name,last_name,phone_number,job_title,balance,department_name,flag1,flag2,flag3,flag4,flag5,flag6,flag7,flag8,vip_id,credit_grade) |

5 |

=file("data/customer雅 Dept.ctx").create@y(#department_id,#job_num,#employee_id,#begin_date,#customer_id,first_name,last_name,phone_number,job_title,balance,department_name,flag1,flag2,flag3,flag4,flag5,flag6,flag7,flag8,vip_id,credit_grade).append(A4) |

A1: open the cursor based on customer composite table.

A2: read the data of branch department table, use department_id as the primary key, and add calculation on “num” column to save the sequence number.

A3: use the customer composite table cursor to associate A2.

A4: replace “department_id” with “num” in the cursor.

A5: export the converted cursor to customerDept.ctx, as shown in the following picture:

Pre-associate dimension tables

Modify init.dfx to load the dimension tables in memory and associate them. The code is as follows:

A |

B |

|

1 |

=file("data/job.btx").import@ib() |

>env(job,A1) |

2 |

=file("data/department.btx").import@b().keys(department_id) |

=file("data/vip.btx").import@b().keys(vip_id) |

3 |

=file("data/credit_grade.btx").import@b().keys(credit_grade) |

=file("data/locations.btx").import@b().keys(location_id) |

4 |

=file("data/countries.btx").import@b().keys(country_id) |

=file("data/regions.btx").import@b().keys(region_id) |

5 |

=A2.switch(location_id,B3.switch(country_id,A4.switch(region_id,B4))) |

|

6 |

=env(department,A5) |

=env(vip,B2) |

7 |

=env(credit_grades,A3) |

|

A1: retrieve the data of job from the bin file, and @i is used to read them as a sequence when there is only one column.

B1: save as global variable “job”.

A2-B4: retrieve the data of branch department, vip, credit grading, location, country, and region from the bin file respectively, and create primary keys.

A5: associate the “department”, “locations”, “countries” and “regions” tables according to their layers.

A6: save as global variable “department”. B6: save as global variable “vip”.

A7: save as global variable “credit_grades”.

The written init.dfx should be put in the main path of node, and it can be called automatically when the node is started or restarted.

Association calculation

Modify the olap.dfx and customer.dfx in previous issues.

The given parameters are still: the value of table name arg_table is customer, and other parameters are in json format. The arg_json smaple is as follows:

{

aggregate:

[

{

func:"sum",

field:"balance",

alias:"sum"

},

{

func:"count",

field:"customer_id",

alias:"count"

}

],

group:

[

"vip_id.interest_rate1:vip_id_interest_rate1",

"department_id.location_id.country_id.country_id:department_id_location_id_country_id",

"job_id"

],

slice:

[

{

dim:"department.applause_rate",

interval:null,

value:[26,30,33,65,67,74,75,77]

},

{

dim:"department.location_id.country_id.region_id.region_name",

value:["Asia","Europe","Americas"]

},

{

dim:"vip.charge_ratio",

value:[9,11]

},

{

dim:"credit_grades.quota2",

value:[50000,80000]

},

{

dim:"job_id",

value:["AD_VP","FI_MGR","AC_MGR","SA_MAN","SA_REP"]

},

{

dim:"flag1",

value:"1"

},

{

dim:"flag8",

value:"1"

}

]

}

There are department_detail.location_id, department_detail.applause_rate, etc. in “group” and “slice”. We should first convert the filtering conditions to the field conditions of the customer table itself, performing filtering before cursor creating, and then associate the customer table and the dimension tables when performing multidimensional analysis calculation.

The colon in department_detail.location_id.country_id.country_id:department_detail_location_id_country_id is used to give an alias for the attribute-lized foreign key field, which is used as the field name of the final result.

Step one: modify customer.dfx.

We should first modify customer.dfx before rewriting olap.dfx. Change the way we handle with arg_json parameter and the SPL code is:

A |

B |

C |

D |

|

1 |

Func |

|||

2 |

if A1.value==null |

return "between("/A1.dim/","/A1.interval(1)/":"/A1.interval(2)/")" |

||

3 |

else if ifa(A1.value) |

return string(A1.value)/".contain("/A1.dim/")" |

||

4 |

else if ifstring(A1.value) |

return A1.dim/"==\""/A1.value/"\"" |

||

5 |

else |

return A1.dim/"=="/A1.value |

||

6 |

func |

|||

7 |

=[] |

=A6.select(like(dim,B6/".*")) |

||

8 |

for C7 |

=[func(A1,B8)] |

||

9 |

>B7|=C8 |

|||

10 |

=${B6}.pselect@a(${B7.concat(" &&")}) |

=A6\C7 |

||

11 |

=C10.create().record([C6,null,B10]) |

|||

12 |

return B11|C10 |

|||

13 |

=json(arg_json) |

=date("2000-01-01") |

||

14 |

=A13.aggregate.(~.func/"("/~.field/"):"/~.alias).concat@c() |

|||

15 |

=A13.group.(if(~=="job_id","job_num",~)) |

|||

16 |

=A15.(if(~=="begin_yearmonth","begin_date\\100:begin_yearmonth",~)).concat@c() |

|||

17 |

=A13.aggregate.(field) |

=A15.(if(~=="begin_yearmonth","begin_date",~)) |

=(A17|B17).id() |

|

18 |

=[] |

|||

19 |

for C17 |

if like(A19,"department_id.*") |

>C17(#A19)="department_id" |

>A18|=["department_id,department:#"] |

20 |

if like(A19,"vip_id.*") |

>C17(#A19)="vip_id" |

>A18|=["vip_id,vip:#"] |

|

21 |

if like(A19,"credit_grade.*") |

>C17(#A19)="credit_grade" |

>A18|=["credit_grade,credit_grade:#"] |

|

22 |

=C17.id().concat@c() |

|||

23 |

=func(A6,A13.slice,"vip","vip_id") |

=func(A6,A23,"credit_grades","credit_grade") |

=func(A6,B23,"department","department_id") |

|

24 |

=[] |

|||

25 |

for C23 |

if A25.dim=="begin_date" && A25.value!=null |

>A25.value=int(interval@m(C13,eval(A25.value))*100+day(eval(A25.value))) |

|

26 |

else if A25.dim=="begin_date" && A25.value==null |

=A25.interval.(~=int(interval@m(C13,eval(~))*100+day(eval(~)))) |

||

27 |

else if A25.dim=="job_id" |

>A25.dim="job_num" |

||

28 |

>A25.value=A25.value.(job.pos@b(~)) |

|||

29 |

else if like(A25.dim, "flag?") |

>A25.value=int(A25.value) |

||

30 |

=[func(A1,A25)] |

>A24|=B30 |

||

31 |

=A13.group.(~.split(":")).(~=~.m(-1))|A13.aggregate.(alias) |

=A31(A31.pos("job_id"))="job(job_num):job_id" |

||

32 |

=A31(A31.pos("begin_yearmonth"))="month@y(elapse@m(date(\""/C13/"\"),begin_yearmonth)):begin_yearmonth" |

|||

33 |

=A31(A31.pos("begin_date"))="elapse@m(B4,begin_date\\100)+(begin_date%100-1):begin_date" |

|||

34 |

return A22,A14,A16,A24.concat("&& "),A31.concat@c(),A18.concat(";") |

|||

Area from A1 to C5 is subprogram which only executes when being called. The code is exactly the same as those in previous issues.

Area from A6 to C12 is a new added subprogram which will be introduced later when being called.

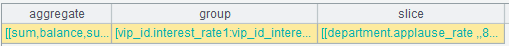

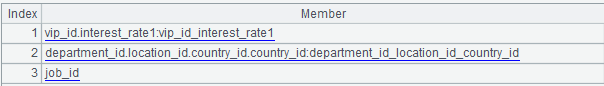

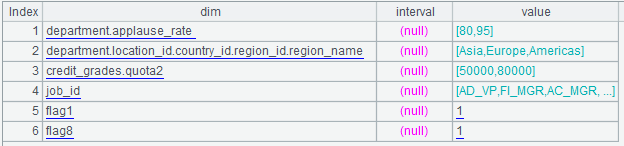

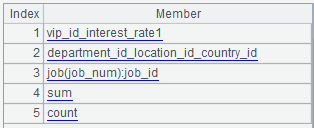

A12: parse arg_json to a table sequence and the return result is a nested multi-layer table sequence as follows:

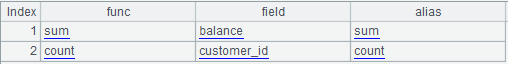

The “aggregate” in it is:

The “group” is:

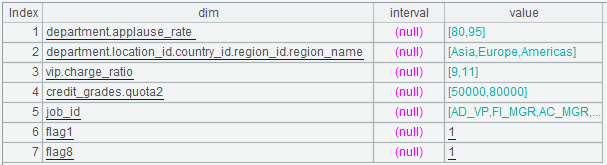

The “slice” is:

A14: first calculate “aggregate” to colon-concatenated string sequences and then concatenate the sequences to a string with comma: sum(balance):sum,count(customer_id):count, i.e., the aggregate expression.

A15: replace the job_id in “group” with job_num.

A16: convert the begin_yearmonth in “group” to integers.

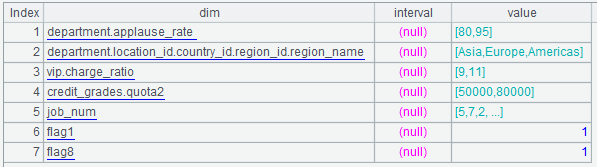

A17-C17: calculate the field names to be read from the composite table. And the result is:

Those fields in associated dimension tables need to be converted to fields of the composite table itself.

A18: define an empty sequence which prepares to store the dimension tables and fields to be associated.

A19: loop through C17, and the loop body is from B19 to C21.

B19: if A19 contains “department_id.*”, then it needs to associate with branch department table sequence.

C19: assign the corresponding member of C17 as “department_id”.

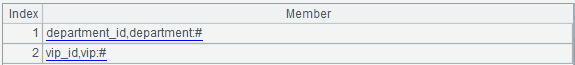

D19: add a member "department_id,department:#" in A18. the “department_id” of the customer table has already been sequence numberized, so we can directly write #.

B20-D20: process the “vip” dimension table in the same way.

B21-D21: process the “credit_grade” dimension table in the same way.

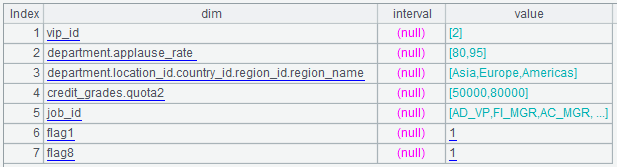

When the loop of A19 is over, the result of A18 is as follows:

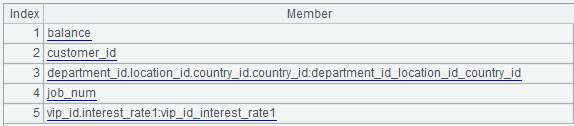

A22: de-duplicate the processed C17, and concatenate the result to a string with commas: "balance,customer_id,department_id,job_num,vip_id".

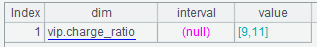

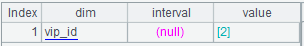

A23: use A13.slice, dimension table “vip” and dimension table field “vip_id” as the given parameters to call the A6 subprogram.

The parameters are stored in A6, B6 and C6 respectively. And A6 is:

Here the third member of A6 is vip.charge_ratio, and the purpose of subprogram is to process it as the filtering condition for “vip_id”, the field of the composite table itself.

B7: define an empty sequence which prepares to store the filtering conditions related to “vip” as follows:

B8: loop through C7, and the loop body is from C8 to C9.

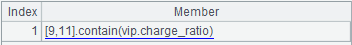

C8: call A1, and calculate B8 as the esProc expression:

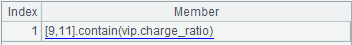

C9: union C8 in B7:

B10: use all the conditions in B7 to filter the dimension table “vip”, and obtain the sequence number of “vip”:

C10: remove C7 from A6:

B11: create a new record in C10:

B12: union B11 and the return result of C10, and end the A6 subprogram.

A23: after the subprogram returns, the filtering conditions related to “vip” in the A23 sequence are converted to the conditions in the vip_id field of the customer table as follows:

B23: convert the “credit_grade.*” conditions in A23 to the conditions in the credit_grade field of the customer table in the same way.

C23: convert the “department.*” conditions in A23 to the conditions in the department_id field of the customer table in the same way.

A24: define an empty sequence which prepares to store the slice (filtering conditions) expressions.

A25: loop through “slice” and the loop body is the area from B25 to C30 in which from B25 to C29 is the optimization conversion to the “value” or “interval” of “slice”.

B25: if the “dim” of A25 is begin_date and the “value” is not null, that is, the begin_date is a single date. In this case, C25 converts that single date to an integer and assigns it to “value”.

B26: if the “dim” of A25 is begin_date and the “value” is null, that is, the begin_date is within a range of dates. In this case, C25 converts the beginning and ending dates to integers and assigns them to “interval”.

B27: if the “dim” of A10 is job_id, that is, job_id is an enumeration value, for example, ["AD_VP","FI_MGR","AC_MGR","SA_MAN","SA_REP"].contain(job_id). In this case, C27 converts the “dim” of A10 to job_num and C28 converts the enumeration value “value” of A25 to the position in the sequence of global variable “job”, i.e., job_num integer sequence. For example: [5,7,2,15,16].

B29: if the “dim” of A25 is flag1, flag2, ..., flag8, that is, the flag bit equals to “1” or “0”. In this case, C29 converts the “value” of A25 from strings to integers.

B30: take the result of B25 to C29, which converts the “value” or “interval” of “slice” for performance optimization, as parameter to call subprogram A1.

The subprogram A1 (from B2 to B5) is identical to the func code in the first customer.dfx, which will not be elaborated here.

C30: append the return result of func A1 to A24. Continue the loop in A25 until it is over. And the sequence of slice expressions is well prepared.

A31: prepare the conversion expression of result set display value. Concatenate A13.group.(~.split(":")).(~=~.m(-1)) with A13.aggregate.alias sequence as follows:

A13.group.(~.split(":")).(~=~.m(-1)) is used to take the alias after the colon if there is one in the “group”, otherwise the value of “group” is directly taken.

C31: replace the job_id in A31 with conversion statement which is used to convert the job_num in result set to job_id.

A32-A33: generate expressions to convert the begin_date and begin_yearmonth in the result from integers to dates.

A34: return each expression string respectively:

The required fields: balance, customer_id, department_id, job_num, vip_id

The aggregate expression: sum(balance):sum,count(customer_id):count

The grouping expression;

vip_id.interest_rate1:vip_id_interest_rate1,department_id.location_id.country_id.country_id:department_id_location_id_country_id,job_num

The slice expression:

[2].contain(department_id)

&& [5,8].contain(credit_grade)

&& [2].contain(vip_id)

&& [5,7,2,15,16].contain(job_num)

&& flag1==1 && flag8==1

The result set display value conversion expression:

vip_id_interest_rate1,department_id_location_id_country_id,job(job_num):job_id,sum,count

The foreign key associate expression:

department_id,department:#;vip_id,vip:#

Step two: modify olap.dfx.

The cellset parameters are arg_table (whose value is customer) and arg_json. The SPL code is as follows:

A |

|

1 |

=call(arg_table/".dfx",arg_json) |

2 |

=file("data/"/arg_table/"Dept.ctx").open() |

3 |

=A2.cursor@m(${A1(1)};${A1(4)};2) |

4 |

=A3.switch(${A1(6)}) |

=A4.groups(${A1(3)};${A1(2)}) |

|

=A5.new(${A1(5)}) |

|

return A6 |

A1: call customer.dfx based on the value of parameter arg_table, the calling parameter is arg_json, and the return value is each expression string returned by customer.dfx.

A2: open the composite table based on the arg_table parameter.

A3: create a cursor on A2 with filtering conditions, and the statement actually executed is:

= A2.cursor@m(balance,customer_id,department_id,job_num,vip_id; [4,5,6,12,16,18,22,23,24,27].contain(department_id) && [5,8].contain(credit_grade) && [2].contain(vip_id) && [5,7,2,15,16].contain(job_num) && flag1==1 && flag8==1;2)

A4: associate the cursor with the dimension tables in memory, and the statement actually executed is:

=A3.switch(department_id,department:#;vip_id,vip:#)

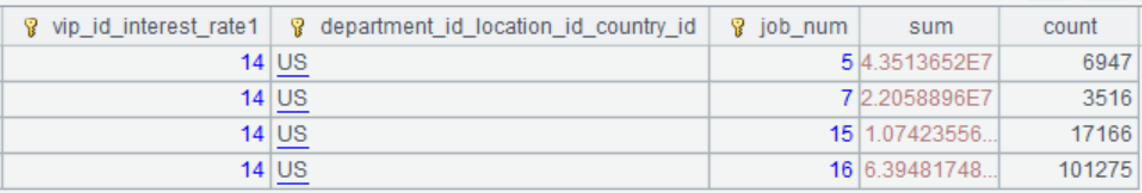

A5: perform small result set grouping on the associated cursor. And the statement actually executed is:

=A4.groups(vip_id.interest_rate1:vip_id_interest_rate1,department_id.location_id.country_id.country_id:department_id_location_id_country_id,job_num;sum(balance):sum,count(customer_id):count), and the execution result is:

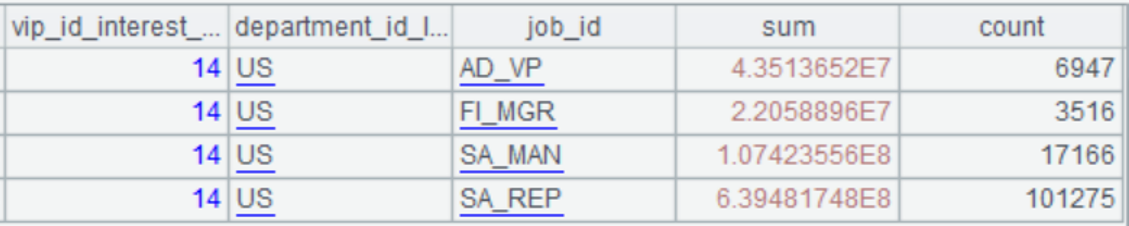

A6: convert the grouping results to display values, and the statement actually executed is:

=A5.new(vip_id_interest_rate1,department_id_location_id_country_id,job(job_num):job_id,sum,count), and the result is:

A7: return the calculation result.

Step three: call in Java code

After being written, olapMem.dfx can be called as a stored procedure during multidimensional analysis, and most of the Java code is the same as those in previous issues with differences in the name of dfx file, and the values of arg_table and arg_json. The Java code is:

public void testOlapServer(){

Connection con = null;

java.sql.PreparedStatement st;

try{

// create a connection

Class.forName("com.esproc.jdbc.InternalDriver");

// get the connection based on url

con= DriverManager.getConnection("jdbc:esproc:local://?onlyServer=true&sqlfirst=plus");

// call the stored procedure, and olap is the file name of dfx

st =con.prepareCall("call olap(?,?)");

st.setObject(1, "newCustomer");

st.setObject(2, "{aggregate:\[{func:\\"sum\\",field:\\"balance\\",alias:\\"sum\\"},{func:\\"count\\",field:\\"customer\_id\\",alias:\\"count\\"}\],group:\[\\"vip\_id.interest\_rate1:vip\_id\_interest\_rate1\\",\\"department\_id.location\_id.country\_id.country\_id:department\_id\_location\_id\_country\_id\\",\\"job\_id\\"\],slice:\[{dim:\\"department.applause\_rate \\",interval:null,value:\[26,30,33,65,67,74,75,77\]},{dim:\\"department.location\_id.country\_id.region\_id.region\_name\\",value:\[\\"Asia\\",\\"Europe\\",\\"Americas\\"\]},{dim:\\"vip.charge\_ratio\\",value:\[9,11\]},{dim:\\"credit\_grades.quota2\\",value:\[50000,80000\]},{dim:\\"job\_id\\",value:\[\\"AD\_VP\\",\\"FI\_MGR\\",\\"AC\_MGR\\",\\"SA\_MAN\\",\\"SA\_REP\\"\]},{dim:\\"flag1\\",value:\\"1\\"},{dim:\\"flag8\\",value:\\"1\\"}\]}");//arg\_json

// execute the stored procedure

st.execute();

// get the result set

ResultSet rs = st.getResultSet();

// continue to process the result set and show it out

}

catch(Exception e){

out.println(e);

}

finally{

// close the connection

if (con!=null) {

try {con.close();}

catch(Exception e) {out.println(e); }

}

}

}

The total execution time of Java code plus SPL is 3 seconds.

SPL Official Website 👉 https://www.scudata.com

SPL Feedback and Help 👉 https://www.reddit.com/r/esProcSPL

SPL Learning Material 👉 https://c.scudata.com

SPL Source Code and Package 👉 https://github.com/SPLWare/esProc

Discord 👉 https://discord.gg/cFTcUNs7

Youtube 👉 https://www.youtube.com/@esProc_SPL

Chinese version