Multidimensional Analysis Backend Practice 3: Dimensions Sorting and Compression

Abstract

This series of articles explain how to perform multidimensional analysis (OLAP) in steps with detailed samples. Click to learn more in Multidimensional Analysis Backend Practice 3: Dimensions Sorting and Compression. Multidimensional Analysis Backend Practice 3: Dimensions Sorting and Compression

Aim of practice

This issue aims at achieving dimensions sorting and compression and further improving the computational speed based on the completion of data type conversion.

The steps of practice are:

1. Prepare the basic wide table: modify the code of last issue to perform dimensions sorting and compression and save as a new composite table file.

2. Access the basic wide table: keep the code of last issue and directly apply it to the new composite table.

3. Append newly added data: append newly added business data every day, and rearrange the data by full sorting every month. We should take every effort to not affect the performance of accessing the basic wide table, while reducing the required time of adding data daily.

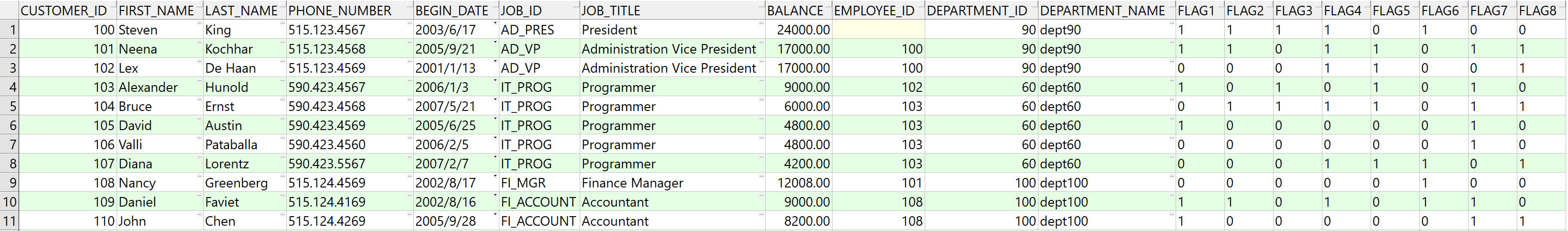

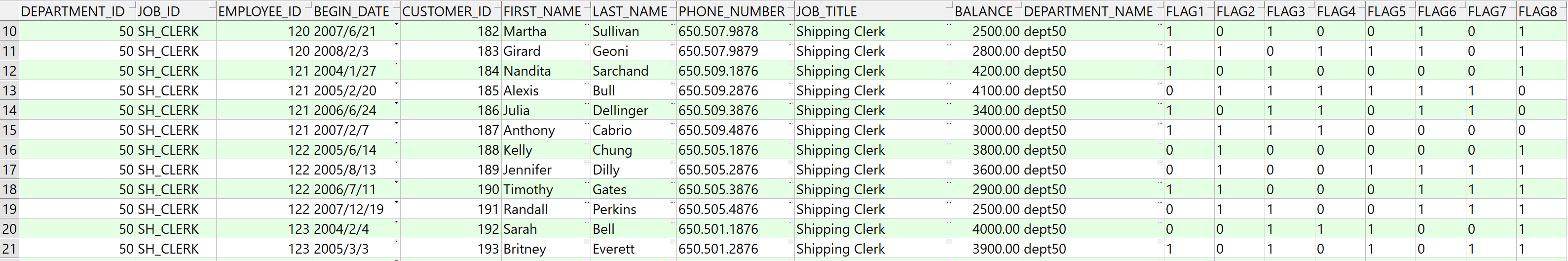

The wide table sample stays unchanged, i.e., customer table. The SQL statement of retrieving data from Oracle database is select * from customer, and the execution result is:

Suppose the current date is 2021-01-12, then the SQL for retrieving today’s newly added data will be:

select department_id,job_id,to_char(begin_date,'yyyymm') begin_month ,sum(balance) sum,count(customer_id) count

from customer

where department_id in (10,20,50,60,70,80)

and job_id in ('AD_VP','FI_MGR','AC_MGR','SA_MAN','SA_REP')

and begin_date>=to_date('2002-01-01','yyyy-mm-dd')

and begin_date<=to_date('2020-12-31','yyyy-mm-dd')

and flag1='1' and flag8='1'

group by department_id,job_id,to_char(begin_date,'yyyymm')

Prepare wide table

Dimensions sorting and compression: ordered columnar storage. “Columnar storage” means to store data in columnar form, and esProc will use columnar storage by default when creating new composite table.

“Ordered” means the field values are stored orderly in physics, that is, the data are sorted by dimension fields and then stored in the composite table. The order of dimension fields for sorting is very critical, and the dimensions with high repetition should be placed in the front.

The dimensions used in this sample include: department_id, job_num, employee_id, begin_date, and customer_id, in which the amount of department_id is the least (only 11 departments in the fact table), so its repetition ranks the highest. Among the other fields, the repetition of job_num, employee_id, begin_date, and customer_id decrease in order.

Meanwhile, the appearance of grouping fields is basically identical to the repetition in practice, so the order of sorting fields can be determined as: department_id,job_num,employee_id,begin_date,customer_id.

Next we use the database to do the sorting as follows:

select department_id,job_id,employee_id,begin_date,customer_id,first_name,last_name,phone_number,job_title,balance,department_name,flag1,flag2,flag3,flag4,flag5,flag6,flag7,flag8

from customer order by department_id,job_id,employee_id,begin_date,customer_id

The execution result is:

Then we modify etl.dfx according to the above requirements, retrieving sorted data from database and generating composite table file to store the basic wide table after date type conversion. The code sample is:

A |

B |

|

1 |

=connect@l("oracle") |

|

2 |

=A1.cursor@d("select department_id,job_id,employee_id,begin_date,customer_id,first_name,last_name,phone_number,job_title,balance,department_name,flag1,flag2,flag3,flag4,flag5,flag6,flag7,flag8 from customer order by department_id,job_id,employee_id,begin_date,customer_id") |

|

3 |

=A1.query@d("select job_id from jobs order by job_id") |

=file("data/job.btx").export@z(A3) |

4 |

=A3.(job_id) |

=date("2000-01-01") |

5 |

=A2.new(int(department_id):department_id,A4.pos@b(job_id):job_num,int(employee_id):employee_id,int(interval@m(B4,begin_date)*100+day(begin_date)):begin_date,int(customer_id):customer_id,first_name,last_name,phone_number,job_title,float(balance):balance,department_name,int(flag1):flag1,int(flag2):flag2,int(flag3):flag3,int(flag4):flag4,int(flag5):flag5,int(flag6):flag6,int(flag7):flag7,int(flag8):flag8) |

|

6 |

=file("data/customer.ctx").create@y(#department_id,#job_num,#employee_id,#begin_date,#customer_id,first_name,last_name,phone_number,job_title,balance,department_name,flag1,flag2,flag3,flag4,flag5,flag6,flag7,flag8) |

|

7 |

=A6.append(A5) |

>A6.close(),A1.close() |

The code is the same as that in last issue except that the SQL statement in A2 is added with sorting and the composite table in A6 specifies the sorting field.

When the data volume is 100 million, the comparison between the exported composite tale file and those in previous issues is as follows:

Number of issue |

Size of file |

Description |

Note |

One |

3.5GB |

Directly exported from database without optimization |

|

Two |

3.0GB |

Data type optimization completed |

|

Three |

2.4GB |

Previous optimization and dimensions sorting and compression completed |

We can clearly see from the above table that the file size decreases by 14% (0.5GB) after completing the data type optimization, and further decreases by 20% (0.6GB) with an overall reduction of 31% (1.1GB) after completing dimensional sorting compression. Smaller files will reduce the amount of reading data from disk, thus effectively improving the performance.

Access wide table

The SPL code and Java code for accessing the basic wide table have no change compared with the previous issue.

Compare the total execution time of Java code plus the backend calculation of return results to those of the previous issues as follows:

Number of issue |

Single-thread |

Two-thread |

Note |

One |

84 seconds |

42 seconds |

|

Two |

31 seconds |

14 seconds |

|

Three |

9 seconds |

5 seconds |

As we can see from the above table, the dimensions sorting and compression further improves the computational performance.

Newly added data

There are newly added data in customer table every day, which need to be added to the composite table file regularly. If the composite table is sorted by date, then we just need to append the daily new data to the end of the file. However, if the customer table is sorted by field like department, then it will not be ordered as a whole after appending new data at the end of the file. Also, if the original data are rearranged every day along with the new data, it will take too much time to calculate.

Instead, we can read new data from the database, sort them by field like department, and append them with T.append@a() function. esProc will automatically create a patch file where daily new data are orderly merged, and the ordered merging will take relatively less time due to the small size of patch file.

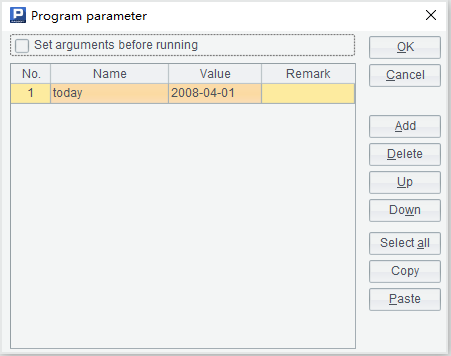

We write etlAppend.dfx based on this method, and the cellset parameter is:

The SPL code is as follows:

A |

B |

|

1 |

if day(today)==1 |

=file("data/customer.ctx").reset() |

2 |

=connect@l("oracle") |

|

3 |

=A2.cursor@d("select department_id,job_id,employee_id,begin_date,customer_id,first_name,last_name,phone_number,job_title,balance,department_name,flag1,flag2,flag3,flag4,flag5,flag6,flag7,flag8 from customer where begin_date=? order by department_id,job_id,employee_id,begin_date ",today) |

|

4 |

=A2.query@d("select job_id from jobs order by job_id") |

|

5 |

=A4.(job_id) |

=date("2000-01-01") |

6 |

=A3.new(int(department_id):department_id,A5.pos@b(job_id):job_num,int(employee_id):employee_id,int(interval@m(B5,begin_date)*100+day(begin_date)):begin_date,int(customer_id):customer_id,first_name,last_name,phone_number,job_title,float(balance):balance,department_name,int(flag1):flag1,int(flag2):flag2,int(flag3):flag3,int(flag4):flag4,int(flag5):flag5,int(flag6):flag6,int(flag7):flag7,int(flag8):flag8) |

|

7 |

=file("data/customer.ctx").open().append@a(A6) |

|

8 |

>A7.close(),A2.close() |

|

A1: identify whether the input date is the first day of each month, if so, execute B1 to rearrange the customer composite table and merge the patch file formed by the new data in the customer composite table file orderly.

A2: connect to the oracle database.

A3: retrieve the data of the current day.

A4: retrieve the data of jobs table for type conversion.

A5, B5 and A6 are the same as etl.dfx in the previous issue.

A7: orderly merge today’s new added data in the patch file.

A8: close the file and the database connection.

etlAppend.dfx needs to be regularly executed each day and the execution is done by using ETL tools or OS timed tasks to invoke esProc script from the command line.

For example:

C:\Program Files\raqsoft\esProc\bin>esprocx d:\olap\etlAppend.dfx

SPL Official Website 👉 https://www.scudata.com

SPL Feedback and Help 👉 https://www.reddit.com/r/esProcSPL

SPL Learning Material 👉 https://c.scudata.com

SPL Source Code and Package 👉 https://github.com/SPLWare/esProc

Discord 👉 https://discord.gg/cFTcUNs7

Youtube 👉 https://www.youtube.com/@esProc_SPL

Chinese version