Multidimensional Analysis Backend Practice 4: Pre-aggregate and Redundant Sorting

Abstract

This series of articles explain how to perform multidimensional analysis (OLAP) in steps with detailed samples. Click to learn more in Multidimensional Analysis Backend Practice 4: Pre-aggregate and Redundant Sorting. Multidimensional Analysis Backend Practice 4: Pre-aggregate and Redundant Sorting

Aim of practice

This issue aims to achieve pre-aggregate and redundant sorting, and further improve the computational speed on the basis of previous calculations.

The steps of practice are:

1. Pre-process the data: implement “partial pre-aggregate” and “redundant sorting of slice dimensions” according to the computational requirements on the basis of existing wide table.

2. Multidimensional analysis calculation: use the result of pre-processing to realize multidimensional analysis calculation.

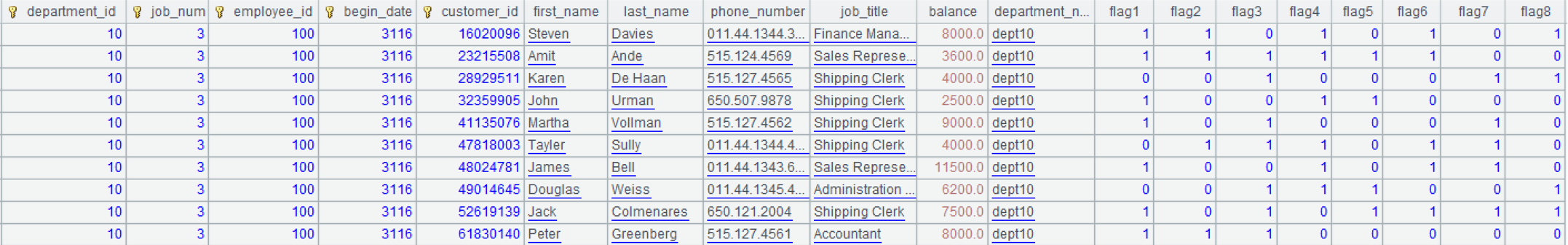

The basic data in this issue is the wide table customer.ctx generated in last issue, which is viewed with the data file tool of esProc as follows:

The main purpose of multidimensional analysis calculation remains the same, which can be described in the following SQL statement of Oracle:

SQL1:

select department_id,job_id,to_char(begin_date,'yyyymm') begin_month ,sum(balance) sum,count(customer_id) count

from customer

where department_id in (10,20,50,60,70,80)

and job_id in ('AD_VP','FI_MGR','AC_MGR','SA_MAN','SA_REP')

and begin_date>=to_date('2002-01-01','yyyy-mm-dd')

and begin_date<=to_date('2020-12-31','yyyy-mm-dd')

and flag1='1' and flag8='1'

group by department_id,job_id,to_char(begin_date,'yyyymm')

For SQL1, a cube file cu_1 containing all grouping and slice fields will be generated when pre-processing the data. cu_1 can be used to improve the performance when performing the multidimensional analysis calculation corresponding to SQL1 with esProc.

Add two calculation purposes based on SQL1 to testify the effects of pre-aggregate:

SQL2:

select department_id,job_id,sum(balance) sum,count(customer_id) count

from customer

where department_id in (10,20,50,60,70,80)

and job_id in ('AD_VP','FI_MGR','AC_MGR','SA_MAN','SA_REP')

and begin_date>=to_date('2002-01-01','yyyy-mm-dd')

and begin_date<=to_date('2020-12-31','yyyy-mm-dd')

and flag1='1' and flag8='1'

group by department_id,job_id

When using esProc to perform the multidimensional analysis calculation described as SQL2, because the grouping fields have one less begin_yearmonth than cu_1, we can’t get the result directly from cu_1, instead, we have to re-aggregate based on cu_1.

SQL3:

select department_id,job_id,to_char(begin_date,'yyyymm') begin_month ,sum(balance) sum,count(customer_id) count

from customer

where department_id in (10,20,50,60,70,80)

and job_id in ('AD_VP','FI_MGR','AC_MGR','SA_MAN','SA_REP')

and begin_date>=to_date('2002-01-01','yyyy-mm-dd')

and begin_date<=to_date('2020-12-31','yyyy-mm-dd')

and flag1='1' and flag8='1'

and flag3='1' and flag6='1'

group by department_id,job_id,to_char(begin_date,'yyyymm')

When using esProc to perform the multidimensional analysis calculation described in SQL3, because the slice fields have extra flag3 and flag6 compared with cu_1, we can’t use cu_1, but have to aggregate on the original wide table customer.ctx.

In addition, two more calculation purposes, SQL4 and SQL5, are added to verify the effects of redundant sorting and time period pre-aggregate respectively.

SQL4:

Select department_id,job_id,to_char(begin_date,'yyyymm') begin_month,department_id,job_id, sum(balance) sum,count(customer_id) count

from customer

where begin_date>=to_date('2006-01-01','yyyy-mm-dd')

and begin_date<=to_date('2006-01-12','yyyy-mm-dd')

group by to_char(begin_date,'yyyymm') ,department_id,job_id

SQL5:

Select sum(balance) sum,count(customer_id) count

from customer

where begin_date>=to_date('2002-01-01','yyyy-mm-dd')

and begin_date<=to_date('2020-12-31','yyyy-mm-dd')

Pre-process data

1. Partial pre-aggregate

Modify etl.dfx and add the following code at the end of the script.

A |

|

1 |

=file("data/customer.ctx").open() |

2 |

=A1.cuboid(cu_1,department_id,job_num,begin_date\100,begin_date,flag1,flag8;sum(balance):sum,count(customer_id):count) |

A1: open the composite table file.

A2: create a cube file cu_1 of partial pre-aggregate for composite table file. Please note that both slice and grouping fields as well as expressions like begin_date\100 should be included.

We need to append the daily new added data to the composite table. esProc will update the cube file automatically whether we append data directly like in the first issue or create a patch file to append data by rearranging the data every month like in the third issue.

The generated cube file and composite table file customer.ctx are in the same path, and the file name is customer.ctx__CUBOID@cu_1. When there are 100 million data in the composite table file, the cube file is 16M.

2. Redundant sorting

Modify etl.dfx and add the following code at the end of the script:

A |

B |

|

1 |

=file("data/customer.ctx").open().cursor() |

|

2 |

=A1.sortx(begin_date,department_id,job_num, employee_id,customer_id) |

|

3 |

=A2.new(begin_date,department_id,job_num,employee_id,customer_id ,first_name,last_name,phone_number,job_title,balance,department_name,flag1,flag2,flag3,flag4,flag5,flag6,flag7,flag8) |

|

4 |

=file("data/customer_begin_date.ctx").create@y(#begin_date,#department_id,#job_num,#employee_id,#customer_id,first_name,last_name,phone_number,job_title,balance,department_name,flag1,flag2,flag3,flag4,flag5,flag6,flag7,flag8) |

|

5 |

=A4.append(A3) |

>A4.close() |

A1: create a cursor based on the composite table file.

A2: sort the composite table on external storage according to begin_date, department_id, job_num, employee_id, and customer_id.

A3: adjust the order of cursor fields.

A4: create a new composite table file customer_begin_date.ctx, and the sorting fields are begin_date, department_id, job_num, employee_id, and customer_id.

A5: output the sorted data to the new composite table file. B5: close the new composite table file.

When there are 100 million data in the composite table, the redundant file is 2.4G, which is basically the same size as the original composite table customer.ctx.

3. Time period pre-aggregate

Time period pre-aggregate needs to use fields of date type, therefore, begin_date cannot be pre-processed to small integers. We should use customer_begin_date.ctx to generate a new customerDate.ctx, and restore begin_date to date type with other fields unchanged.

Modify etl.dfx, and add the following code to the end of the script:

A |

|

1 |

=file("data/customerDate.ctx").open() |

2 |

=A1.cuboid(cu_date_year_month,month@y(begin_date);sum(balance),count(customer_id)) |

3 |

=A1.cuboid(cu_date_year,year(begin_date);sum(balance),count(customer_id)) |

A1: open the composite table.

A2: create a cube named cu_date_year_month, and use year and month as the aggregate dimension of time period pre-aggregate.

A3: create a cube named cu_date_year, and use year as the aggregate dimension of time period pre-aggregate.

Multidimensional analysis calculation

1. Partial pre-aggregate

Modify olap.dfx according to the requirements of SQL1, and change the ordinary grouping calculation to a partial pre-aggregate calculation.

The parameters of olap.dfx are still arg_table and arg_son.

The SPL code are modified as follows:

A |

|

1 |

=call(arg_table/".dfx",arg_json) |

2 |

=file("data/"/arg_table/".ctx").open() |

3 |

=A2.cgroups@m(${A1(3)};${A1(2)};${A1(4)}) |

4 |

=A3.new(${A1(5)}) |

5 |

return A4 |

The code stays the same except for A3.

A3: use the pre-generated cube file to filter the composite table and perform grouping and aggregate of a small result set, and here the name of cube is not specified. esProc will choose the most suitable cube automatically, i.e., cu_1. @m option is used for multi-thread parallel calculation, and the last parameter 2 of the function refers to two-thread.

The statement actually executed is:

=A2.cgroups(department_id,job_num,begin_date\100:begin_yearmonth; sum(balance):sum,count(customer_id):count; [10,20,50,60,70,80].contain(department_id) && [5,7,2,15,16].contain(job_num) && between(begin_date,2401:25131) && flag1==1 && flag8==1;2)

The Java code is not changed compared to the last issue. Compare the execution time of Java code plus backend calculation of return results with those of the previous issues as follows:

Number of issue |

Single-thread |

Two-thread |

Note |

One |

84 seconds |

42 seconds |

|

Two |

31 seconds |

14 seconds |

|

Three |

9 seconds |

5 seconds |

|

Four |

0.1 seconds |

0.1 seconds |

Partial pre-aggregate |

As we can see from the above table, partial pre-aggregate has a significant improvement in the computational performance.

As for SQL2, the above code does not need to change except for changing the “group” with parameter arg_json to:

group:

[

"department_id",

"job_id"

]

esProc needs to re-aggregate based on cu_1 when calculating.

As for SQL3, the code does not need to change either except for adding two conditions to the “slice” with parameter arg_json:

slice:

[

{

dim:"department_id",

value:[10,20,50,60,70,80]

},

{

dim:"job_id",

value:["AD_VP","FI_MGR","AC_MGR","SA_MAN","SA_REP"]

},

{

dim:"begin_date",

interval:[date("2002-01-01"),date("2020-12-31")]

},

{

dim:"flag1",

value:"1"

},

{

dim:"flag3",

value:"1"

},

{

dim:"flag6",

value:"1"

},

{

dim:"flag8",

value:"1"

}

]

esProc can not use cu_1 when calculating, because flag3 and flag6 are not in cu_1, instead, it has to use the original wide table to do filtering and aggregation.

The execution time of SQL1, SQL2, and SQL3 for the corresponding esProc SPL codes is compared as follows:

Number of issue |

Single-thread |

Two-thread |

Note |

SQL1 |

0.1 seconds |

0.1 seconds |

|

SQL2 |

0.1 seconds |

0.1 seconds |

|

SQL3 |

4 seconds |

4 seconds |

The execution time of re-aggregate on cu_1 is not changed much due to the small size of cu_1, therefore, the time of SQL1 and SQL2 is about the same. SQL3 can not use cu_1 and has to calculate from the original composite table, so it takes a little longer, which can be further improved by adding cu_1 field or creating new cube file cu_2.

It should be noted that because SQL3 adds two filtering conditions and retrieves less data from the composite table, the execution time is shorter than that in the third issue.

The comparison between the composite table file and cube file is:

File name |

Size of file |

Note |

Composite table |

2.4GB |

|

cube file |

16MB |

As can be seen from the above table, the cube file is relatively small compared with composite table. It takes up less storage but offers a significant improvement in computational performance, which is well worth the effort. Therefore, according to the actual situations of the multidimensional analysis system, we can create as many cube files as the disk allows for those high-frequency calculations, using space in exchange of time.

2. Redundant sorting

Adjust the “slice” in cellset parameter arg_json according to the requirements of SQL4.

slice:

slice:

[

{

dim:"begin_date",

interval:[date("2006-01-01"),date("2006-01-12")]

}

]

Modify customer.dfx according to the requirements of SQL4, adding a return parameter, i.e., the file name of redundant sorting composite table.

A |

B |

C |

|

1 |

func |

||

2 |

if A1.value==null |

return "between("/A1.dim/","/A1.interval(1)/":"/A1.interval(2)/")" |

|

3 |

else if ifa(A1.value) |

return string(A1.value)/".contain("/A1.dim/")" |

|

4 |

else if ifstring(A1.value) |

return A1.dim/"==\""/A1.value/"\"" |

|

5 |

else |

return A1.dim/"=="/A1.value |

|

6 |

=json(arg_json) |

=date("2000-01-01") |

|

7 |

=A6.aggregate.(~.func/"("/~.field/"):"/~.alias).concat@c() |

||

8 |

=A6.group.(if(~=="job_id","job_num",~)) |

||

9 |

=A8.(if(~=="begin_yearmonth","begin_date\\100:begin_yearmonth",~)).concat@c() |

||

10 |

=A6.aggregate.(field) |

=A8.(if(~=="begin_yearmonth","begin_date",~)) |

|

11 |

=(A10|C10).id().concat@c() |

||

12 |

=[] |

||

13 |

for A6.slice |

if A13.dim=="begin_date" && A13.value!=null |

>A13.value=int(interval@m(C6,A13.value)*100+day(A13.value)) |

14 |

else if A13.dim=="begin_date" && A13.value==null |

=A13.interval.(~=int(interval@m(C6,eval(~))*100+day(eval(~)))) |

|

15 |

else if A13.dim=="job_id" |

>A13.dim="job_num" |

|

16 |

>A13.value=A13.value.(job.pos@b(~)) |

||

17 |

else if like(A13.dim, "flag?") |

>A13.value=int(A13.value) |

|

18 |

=[func(A1,A13)] |

>A12|=B18 |

|

19 |

=A6.group|A6.aggregate.(alias) |

=A19(A19.pos("job_id"))="job(job_num):job_id" |

|

20 |

=A19(A19.pos("begin_yearmonth"))="month@y(elapse@m(date(\""/C6/"\"),begin_yearmonth)):begin_yearmonth" |

||

21 |

=A19(A19.pos("begin_date"))="elapse@m(B4,begin_date\\100)+(begin_date%100-1):begin_date" |

||

22 |

=if(A6.slice.(dim).pos("begin_date") && !A6.slice.(dim).pos("department_id"),"data/customer_begin_date.ctx","data/customer.ctx") |

||

23 |

return A11,A7,A9,A12.concat(" &&"),A19.concat@c(),A22 |

||

Area from A1 to A21 keeps unchanged.

A22: identify the “slice” in parameter arg_json: if it has begin_date dimension and no department_id dimension, then the result will return the file name of redundant sorting composite table customer_begin_date.ctx; if not, it will return the file name of original composite table customer.ctx.

A23: add a return parameter to return the file name of the composite table in A22.

Modify olap.dfx according to the requirements of SQL4, changing the ordinary grouping to redundant sorting and aggregation.

The parameters of olap.dfx are arg_tbale and arg_json.

The modified SPL is as follows:

A |

|

1 |

=call(arg_table/".dfx",arg_json) |

2 |

=file(A1(6)).open() |

3 |

=A2.cursor@m(${A1(1)};${A1(4)};2) |

4 |

=A3.groups(${A1(3)};${A1(2)}) |

5 |

=A4.new(${A1(5)}) |

6 |

return A5 |

All else remains the same except for A2 and A3.

A2: use the sixth parameter returned by customer.dfx, i.e., the file name, to open the composite table. If the slice fields only have begin_date and no department_id, then the statement actually executed is:

=file("data/customer_begin_date.ctx").open()

Otherwise, execute:

=file("data/customer.ctx").open()

A3: the statement actually executed is:

=A2.cursor@m(department_id,job_num,begin_date\100:begin_yearmonth; sum(balance):sum,count(customer_id):count; between(begin_date,7201:7212));2)

The Java code only changes the “slice” in parameter arg_json compared to the last issue, all else remaining the same. Compare the execution time of Java code plus backend calculation of return results with that of the cases without redundant sorting as follows:

Number of issue |

Single-thread |

Two-thread |

Note |

No redundant sorting |

2.3 seconds |

1.5 seconds |

|

Redundant sorting |

0.2 seconds |

0.2 seconds |

We can see from the above comparison that redundant sorting clearly improves computational performance.

The situations suitable for redundant sorting:

1) In the case of wide table in columnar storage, it is more suitable for redundant sorting if the slice field is at the back of the sorting fields.

For example: the sorting fields of wide table customer.ctx are department_id, job_num, employee_id, begin_date, and customer_id. In this case, using begin_date as the slice field requires traversing the whole begin_date column, which is slow to calculate. While the sorting fields of redundant sorting composite table customer_begin_date.ctx are begin_date, employee_id, department_id, job_num, and customer_id. The slice field begin_date is the first dimension in the composite table sorting, so we don’t need to traverse the whole begin_date column when using begin_date for slicing, which makes the calculation much faster.

2) Besides meeting the above situation, the fewer the number of records satisfying the filtering condition when slicing, the more effective the redundant sorting will be. For example: if the date filtering condition of begin_date is between year 2000 and year 2021, then basically all the data in the composite table will satisfy this condition. In this case, the time for slice calculation is almost as long as that for traversing, which makes the effect of redundant sorting less notable. However, the effect will be more effective if the date range is narrowed down and the slice condition can filter out more than 90% of the data.

3. Time period pre-aggregate

Modify the cellset parameter arg_json according to the requirements of SQL5:

{

aggregate:

[

{

func:"sum",

field:"balance",

alias:"sum"

},

{

func:"count",

field:"customer_id",

alias:"count"

}

],

group:

[

],

slice:

[

{

dim:"begin_date",

interval:[date("2002-01-01"),date("2020-12-31")],

value:null

}

]

}

Rewrite a customerDate.dfx to handle with arg_json and the code is as follows:

A |

B |

C |

||

1 |

func |

|||

2 |

if A1.value==null |

return "between("/A1.dim/","/A1.interval(1)/":"/A1.interval(2)/")" |

||

3 |

else if ifa(A1.value) |

return string(A1.value)/".contain("/A1.dim/")" |

||

4 |

else if ifstring(A1.value) |

return A1.dim/"==\""/A1.value/"\"" |

||

5 |

else |

return A1.dim/"=="/A1.value |

||

6 |

=json(arg_json) |

|||

7 |

=A6.aggregate.(~.func/"("/~.field/"):"/~.alias).concat@c() |

|||

8 |

=A6.group.(if(~=="job_id","job_num",~)) |

|||

9 |

=A8.(if(~=="begin_yearmonth","begin_date\\100:begin_yearmonth",~)).concat@c() |

|||

10 |

=A6.aggregate.(field) |

=A8.(if(~=="begin_yearmonth","begin_date",~)) |

||

11 |

=(A10|C10).id().concat@c() |

|||

12 |

=[] |

|||

13 |

for A6.slice |

if A13.dim=="job_id" |

>A13.dim="job_num" |

|

14 |

>A13.value=A13.value.(job.pos@b(~)) |

|||

15 |

else if like(A13.dim, "flag?") |

>A13.value=int(A13.value) |

||

16 |

=[func(A1,A13)] |

>A12|=B16 |

||

17 |

=A6.group|A6.aggregate.(alias) |

=A17(A17.pos("job_id"))="job(job_num):job_id" |

||

18 |

=if(A6.slice.(dim).pos("begin_date") && !A6.slice.(dim).pos("department_id"),"data/5customer_test1亿 _date.ctx","data/4customer_test1 亿.ctx") |

|||

19 |

return A11,A7,A9,A12.concat("&&"),A17.concat@c(),A18 |

|||

Compared to the previous customer.dfx, this script removes the code that converts begin_date from date to integer and then to date again, and the rest remains the same.

Modify olap.dfx according to the requirements of SQL5, changing the ordinary grouping to time period pre-aggregation.

The parameters of olap.dfx are agr_table (whose value is customerDate) and arg_json.

The modified SPL is as follows:

A |

|

1 |

=call(arg_table/".dfx",arg_json) |

2 |

=file(A1(6)).open() |

3 |

=A2.cgroups@m(${A1(3)};${A1(2)};${A1(4)};2) |

4 |

=A3.new(${A1(5)}) |

5 |

return A4 |

The code has no change except for A2 and A3.

A2: use the sixth parameter returned by customerDate.dfx, i.e., the file name, to open the composite table file. If the slice fields only have begin_date and no department_id, the statement actually executed is:

=file("data/customerDate.ctx").open()

Otherwise, execute:

=file("data/customer.ctx").open()

A3: use the pre-generated time period pre-aggregate cube file to perform full aggregation on composite table. Here the name of cube is not specified, because esProc will automatically choose the most suitable cube, that is, cu_date_year.

The statement actually executed is:

=A2.cgroups@m(;sum(balance):sum,count(customer_id):count;between(begin_date, date("2001-01-01"):date("2020-12-31"));2)

The Java code is still not changed compared with the last issue. Compare the execution time of Java code plus backend calculation of return results to the cases without time period pre-aggregate as follows:

Calculation method |

Single-thread |

Two-thread |

Note |

No time period pre-aggregate |

29 seconds |

18 seconds |

|

Time period pre-aggregate |

0.1 seconds |

0.1 seconds |

It’s clear in the above table that time period pre-aggregate also has a significant improvement in computational performance.

The size comparison between the composite table and cube file is as follows:

File name |

File size |

Note |

Composite table |

2.4GB |

|

Cube file |

16MB |

Year |

Cube file |

16MB |

Year and month |

SPL Official Website 👉 https://www.scudata.com

SPL Feedback and Help 👉 https://www.reddit.com/r/esProcSPL

SPL Learning Material 👉 https://c.scudata.com

SPL Source Code and Package 👉 https://github.com/SPLWare/esProc

Discord 👉 https://discord.gg/cFTcUNs7

Youtube 👉 https://www.youtube.com/@esProc_SPL

Chinese version